Machine Learning

Bayesian Methods for Ecological and Environmental Modelling

UKCEH Edinburgh

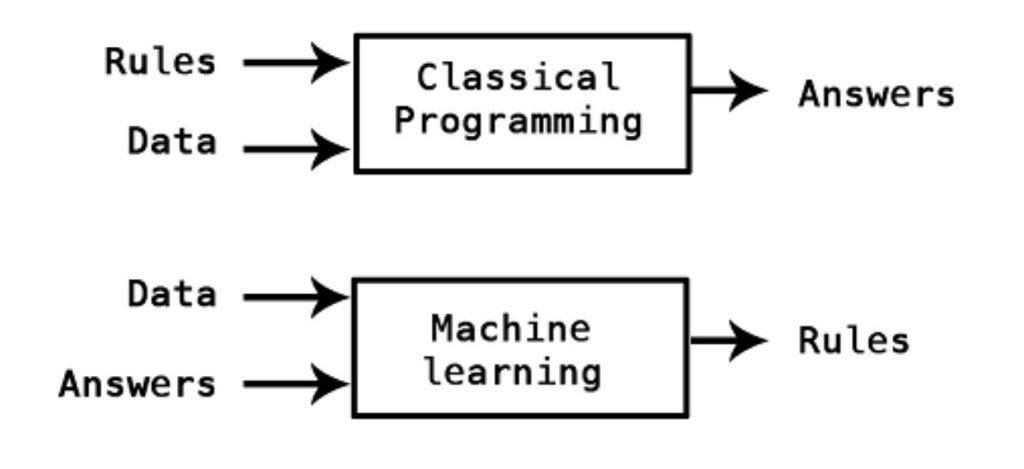

Conventional statistical modelling:

- we declare the model, f (the mathematical form relating the variables x to the response)

- the algorithm estimates the parameters, \(\theta\)

Machine Learning:

- we declare the variables x (“features”) to use

- the algorithm estimates both the parameters \(\theta\) and the model f

Machine Learning

https://xkcd.com

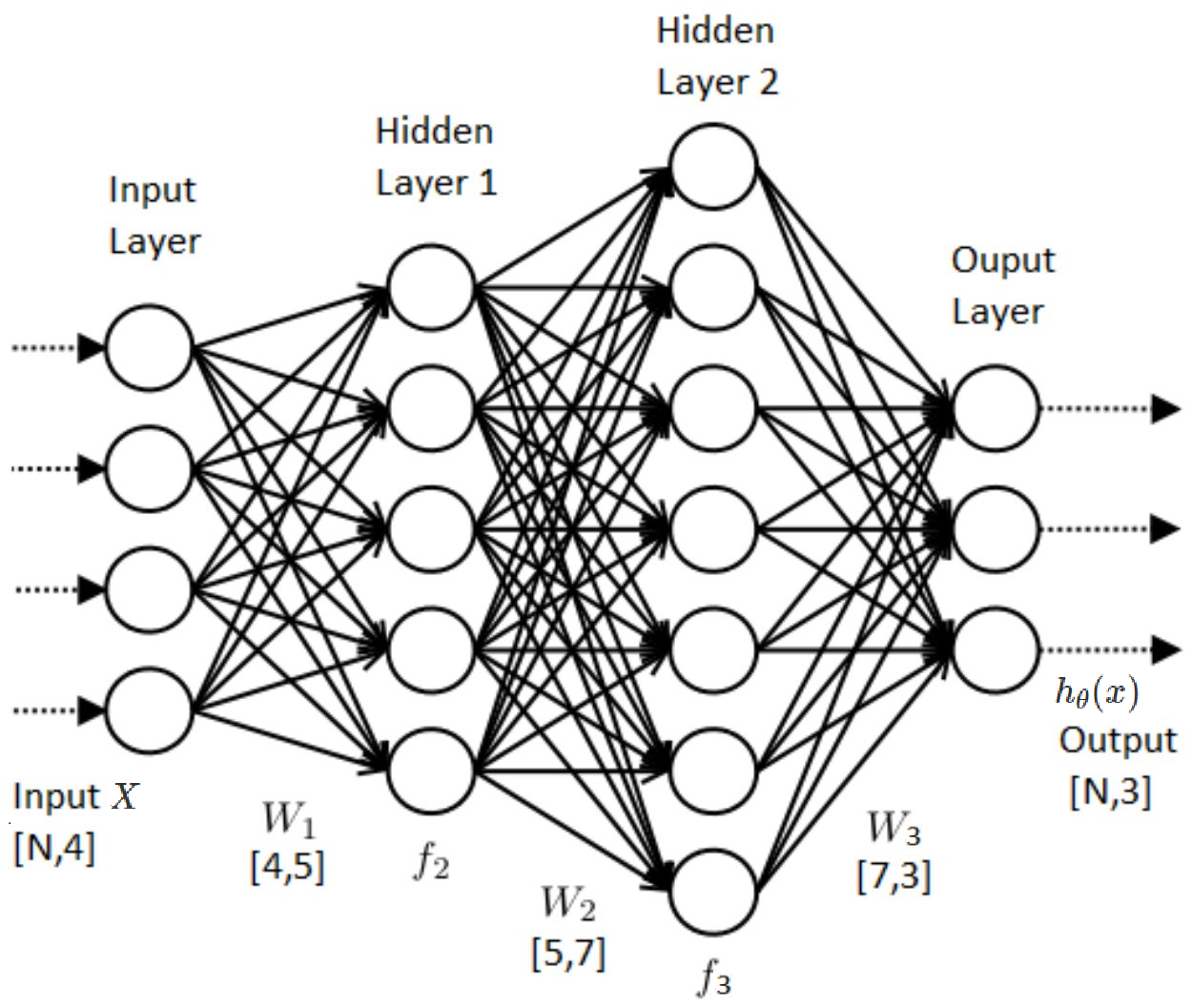

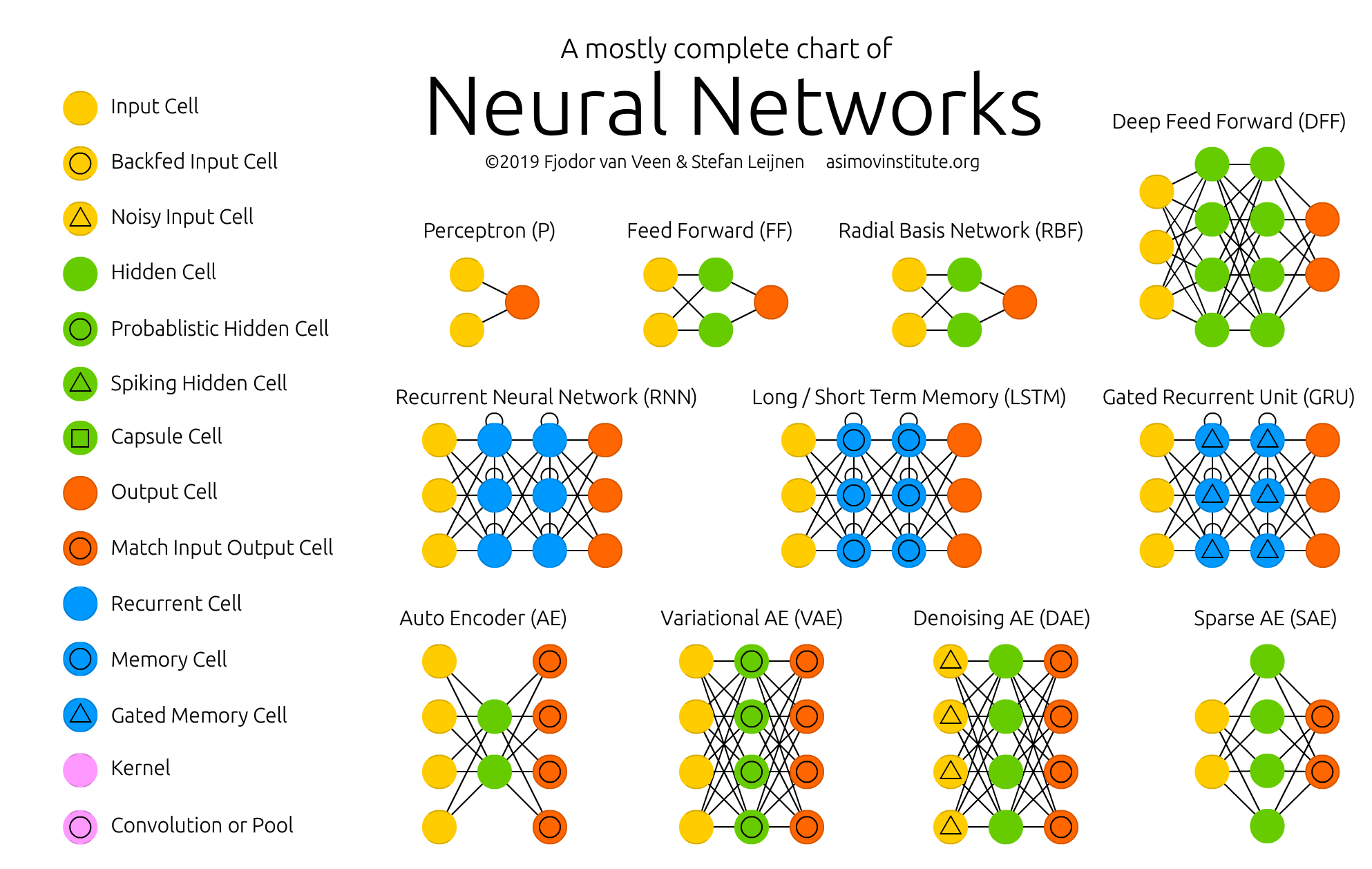

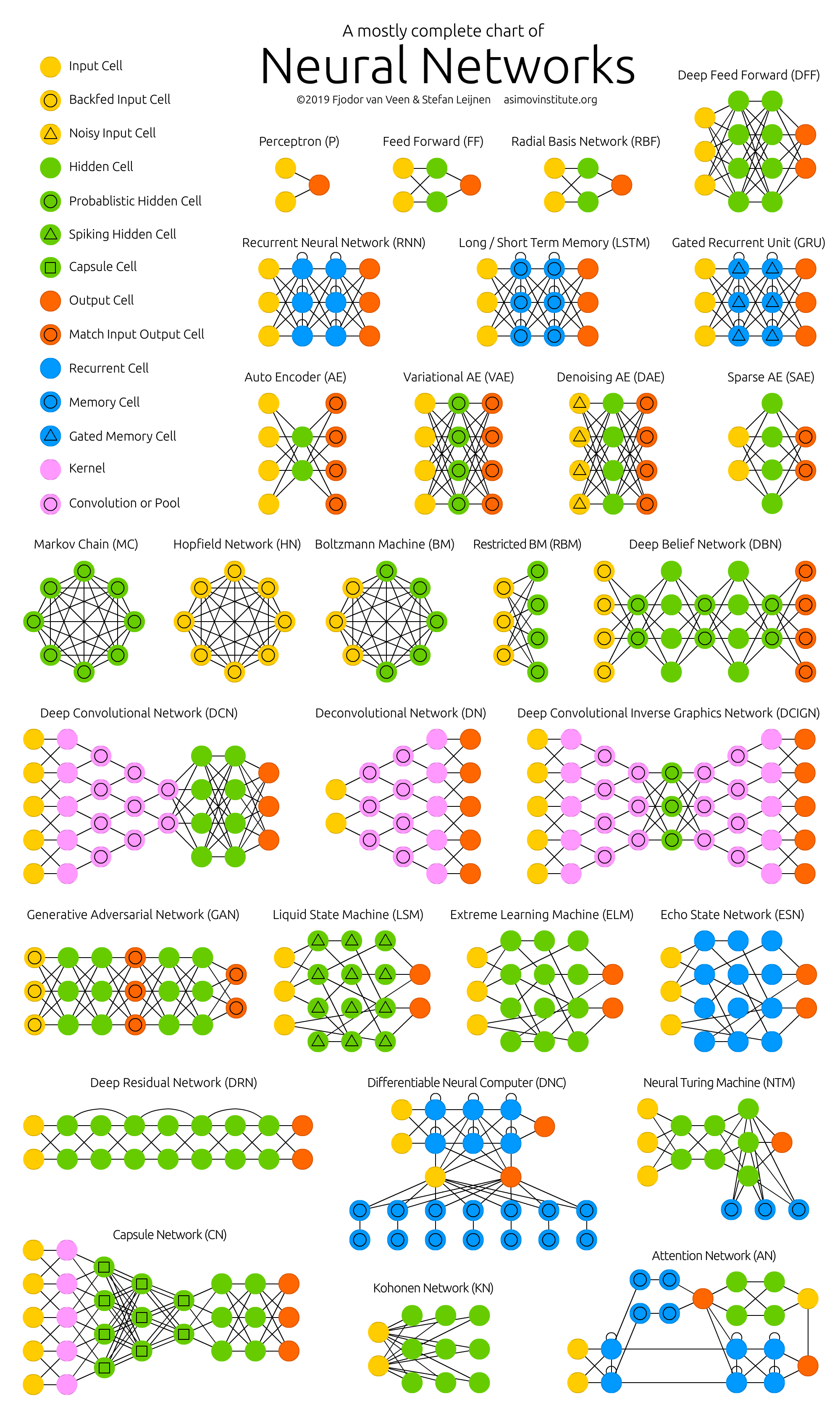

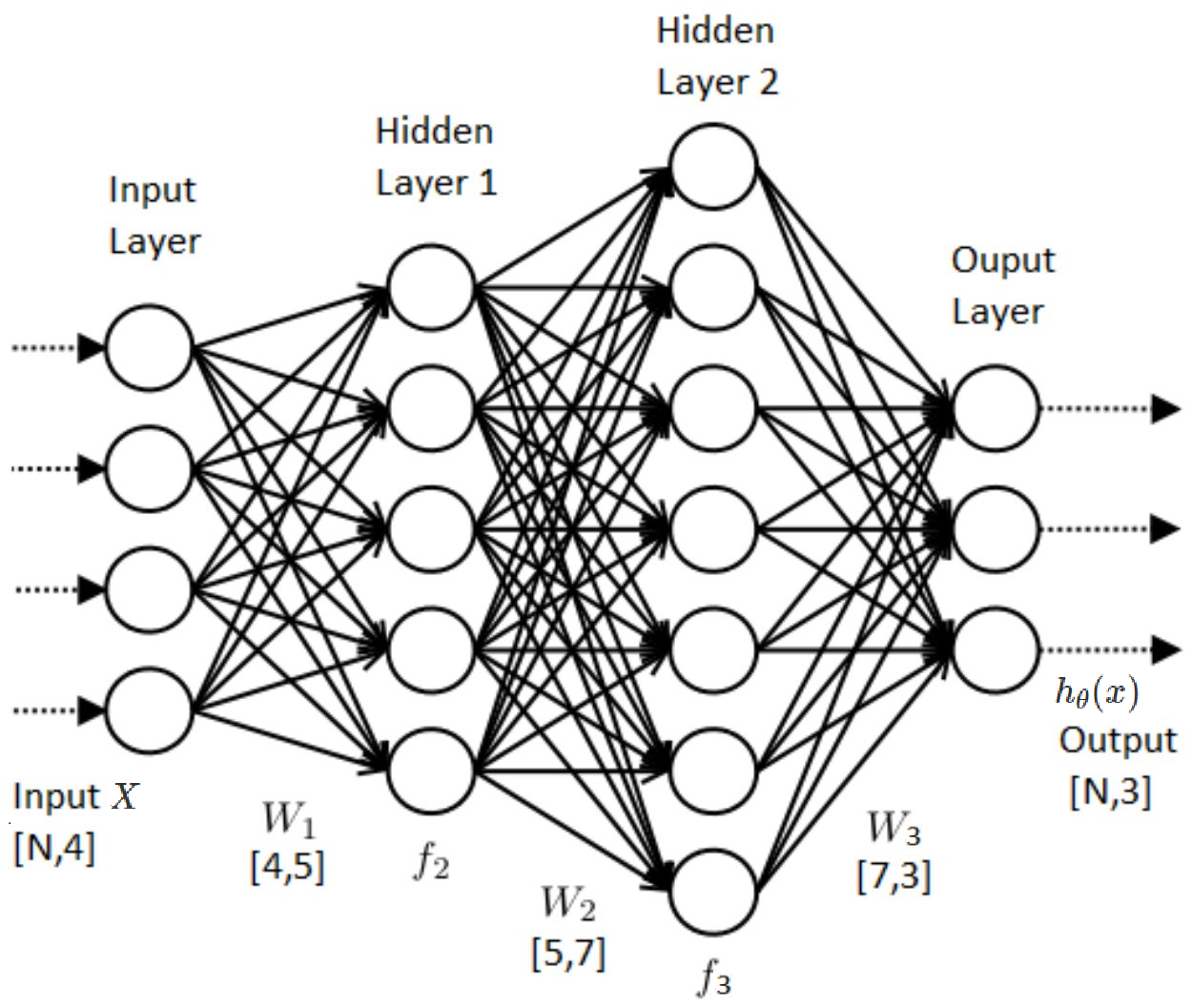

Neural Networks

- inputs = covariates

- outputs = response variables

- layers = stacked GLM-like predictions (a linear combination of the previous layer’s values passed through a nonlinear function)
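The analogy between layers and GLM predictions can be made concrete with a small sketch. It assumes a single hidden layer with a logistic inverse link and an identity output; the function names and all weights are hypothetical, chosen by hand rather than fitted to any data.

```python
import math


def logistic(z):
    """Inverse logit link, as in a logistic GLM."""
    return 1.0 / (1.0 + math.exp(-z))


def identity(z):
    """Identity link, as in an ordinary linear model."""
    return z


def glm_unit(inputs, weights, bias, inverse_link):
    """One GLM-style unit: a linear predictor passed through an inverse link."""
    eta = bias + sum(w * x for w, x in zip(weights, inputs))
    return inverse_link(eta)


def layer(inputs, weight_rows, biases, inverse_link):
    """A layer is a set of GLM-style units that all see the same inputs."""
    return [glm_unit(inputs, w, b, inverse_link)
            for w, b in zip(weight_rows, biases)]


# Hypothetical weights, chosen by hand for illustration (not fitted to data).
x = [0.5, -1.0]                                         # inputs = covariates
hidden = layer(x, [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1], logistic)
output = layer(hidden, [[2.0, -3.0]], [0.5], identity)  # output = response

print("hidden layer:", hidden)
print("output:", output)
```

Each hidden unit is a logistic regression on the covariates, and the output is a linear model on the hidden units. Training a network amounts to estimating all of these weights together.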

Neural Networks

- Highly flexible, often uninterpretable

- Still just models, but typically with thousands of parameters

- MCMC computation is difficult with so many parameters

Neural Networks

Ideally, we want to:

- keep flexibility of ML

- quantify uncertainty

- incorporate prior knowledge

Some promising approaches

- Bayesian Additive Regression Trees (BART)

- Bayesian Adaptive Spline Surfaces (BASS)

- Bayesian Gaussian Process Regression

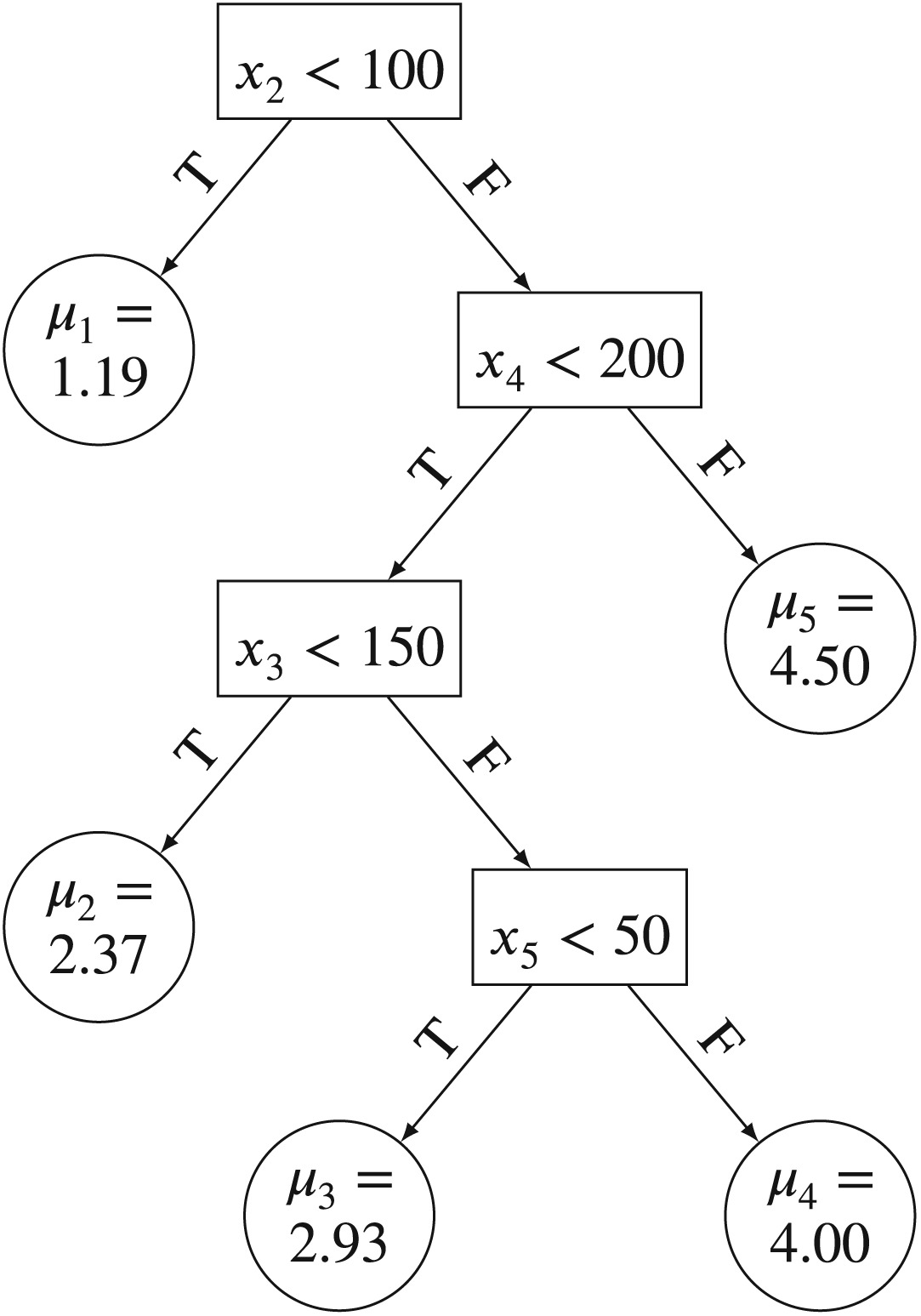

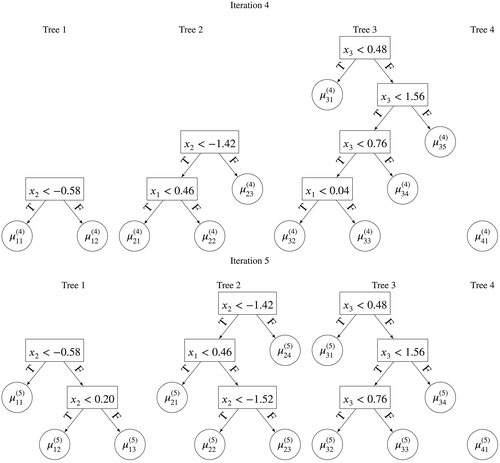

Bayesian Additive Regression Trees (BART)

Related to random forests, gradient boosted methods, GAMs

https://onlinelibrary.wiley.com/doi/abs/10.1002/sim.8347

BART formal definition

\[y = \sum_{j=1}^m g(X, T_j, M_j) + \epsilon\]

- \(m\) is the number of trees (fixed)

- \(X\) is a matrix of covariates

- \(T_j\) is a vector of binary split decision rules

- \(M_j\) is a vector of terminal node weights

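- \(g()\) is a look-up function that returns, for each row of \(X\), the terminal node weight in \(M_j\) reached by following the split rules in \(T_j\)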

- \(\epsilon \sim N(0, \sigma^2)\) is an error term
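The definition above can be sketched in a few lines. This only evaluates a sum of trees for given \(T_j\) and \(M_j\); it does not do the Bayesian fitting (priors on trees and MCMC over them). The data structures, the two trees, the weights and \(\sigma\) are all hypothetical choices for illustration.

```python
import random


def g(x, tree, weights):
    """Look-up function: follow the split rules in `tree` for one row x
    and return the terminal node weight it lands on."""
    node = tree
    while isinstance(node, dict):          # internal node: apply its split rule
        branch = "left" if x[node["var"]] <= node["cut"] else "right"
        node = node[branch]
    return weights[node]                   # leaf: an index into M_j


def sum_of_trees(x, trees, all_weights):
    """Sum of g(x, T_j, M_j) over the m trees."""
    return sum(g(x, t, m) for t, m in zip(trees, all_weights))


# Hypothetical example with m = 2 small trees (not fitted to any data).
T1 = {"var": 0, "cut": 0.5, "left": 0, "right": 1}
M1 = [-1.0, 2.0]
T2 = {"var": 1, "cut": 10.0, "left": 0,
      "right": {"var": 0, "cut": 0.2, "left": 1, "right": 2}}
M2 = [0.5, -0.5, 1.5]

X = [[0.1, 5.0], [0.9, 12.0], [0.1, 20.0]]   # rows = observations
mean = [sum_of_trees(x, [T1, T2], [M1, M2]) for x in X]

# Add the error term epsilon ~ N(0, sigma^2); sigma is an assumed value.
rng = random.Random(1)
sigma = 0.1
y = [mu + rng.gauss(0.0, sigma) for mu in mean]

print(mean)   # [-0.5, 3.5, -1.5]
```

Each tree contributes only a small piece of the prediction. In BART the priors keep individual trees shallow and their weights small, so the flexibility comes from the sum.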

Bayesian Additive Regression Trees (BART)

Very simple to implement:

Try this in the next practical (time permitting) …

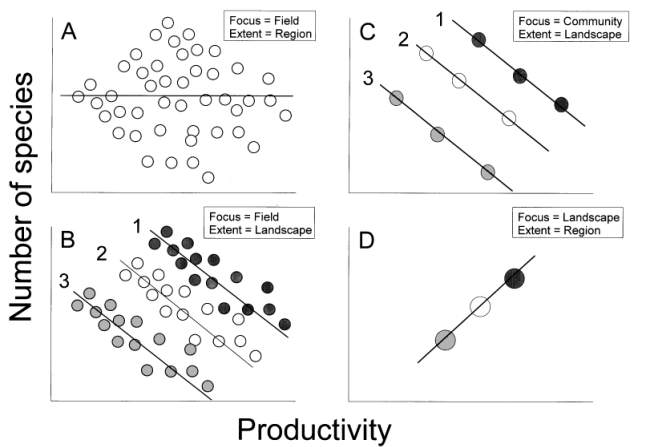

The perils of machine learning

- avoids using prior knowledge of causation

- depends critically on cross-validation

  - “out-of-sample” predictions are really a sub-sample of the data

  - “pseudo-out-of-sample” predictions

- depends critically on “Big Data”

  - i.e. representative sample of population to be predicted

- requires uncertainty quantification
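The point about cross-validation can be illustrated with a small simulation. The sketch below assumes a curved truth (\(y = x^2\)) that is sampled only for \(0 \le x \le 1\) and fitted with a straight line; the data, fold count and helper names are all invented for the example.

```python
import math
import random


def k_fold_splits(n, k, seed=0):
    """Yield (train, test) index lists for k-fold cross-validation."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i, test in enumerate(folds):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, test


def fit_line(xs, ys):
    """Least-squares intercept and slope for y = a + b * x."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def rmse(pred, obs):
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(pred, obs)) / len(obs))


# Simulated data: the truth is curved but only sampled on 0 <= x <= 1.
rng = random.Random(1)
xs = [rng.uniform(0.0, 1.0) for _ in range(100)]
ys = [x ** 2 + rng.gauss(0.0, 0.05) for x in xs]

# "Out-of-sample" error from 5-fold CV: every test fold is a sub-sample of xs.
fold_errors = []
for train, test in k_fold_splits(len(xs), 5):
    a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
    fold_errors.append(rmse([a + b * xs[i] for i in test],
                            [ys[i] for i in test]))
cv_rmse = sum(fold_errors) / len(fold_errors)

# Error for a population the sample does not represent (x between 1.5 and 2).
a, b = fit_line(xs, ys)
new_x = [1.5 + 0.1 * i for i in range(6)]
extrap_rmse = rmse([a + b * x for x in new_x], [x ** 2 for x in new_x])

print(f"CV RMSE: {cv_rmse:.3f}, RMSE outside sampled range: {extrap_rmse:.3f}")
```

The cross-validated error looks small because each held-out fold comes from the same range as the training data. It says little about predictions for conditions the sample does not cover.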

Observations are not enough

Heliocentric model

Geocentric model

Both models match observations but which is more likely?

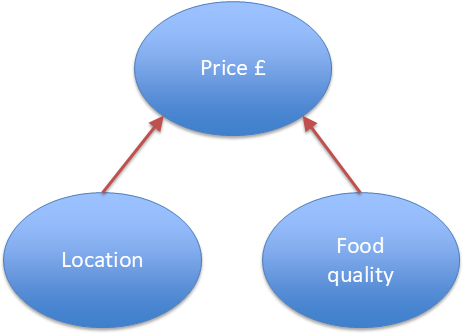

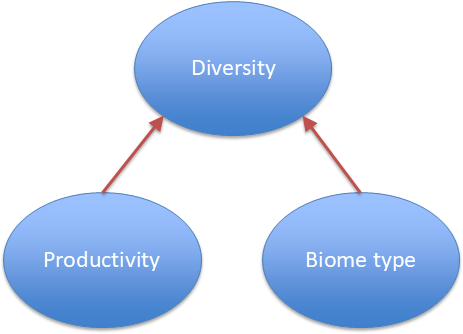

Causal inference

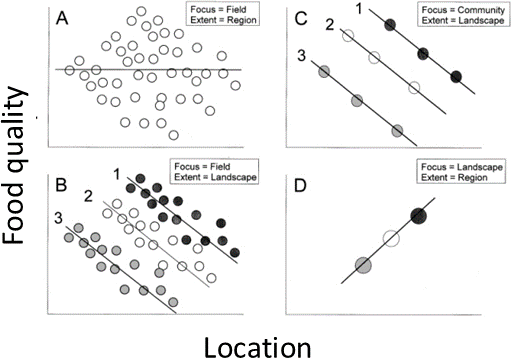

Simpson’s paradox

Causal information needed to make sense of the data
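A small numerical sketch of Simpson’s paradox, using hypothetical counts invented for illustration (two treatments, A and B, and two severity groups):

```python
# Hypothetical counts (successes, trials); not real data.
counts = {
    "mild":   {"A": (90, 100),   "B": (850, 1000)},
    "severe": {"A": (300, 1000), "B": (20, 100)},
}

# Success rate within each severity group.
within = {group: {t: s / n for t, (s, n) in arms.items()}
          for group, arms in counts.items()}

# Success rate after pooling the groups.
pooled = {}
for t in ("A", "B"):
    successes = sum(counts[group][t][0] for group in counts)
    trials = sum(counts[group][t][1] for group in counts)
    pooled[t] = successes / trials

for group, rates in within.items():
    print(group, rates)
print("pooled", pooled)
```

A has the higher success rate within both groups (0.90 vs 0.85 and 0.30 vs 0.20), yet B looks better when the groups are pooled (about 0.79 vs 0.35), because A was mostly given to severe cases. The data alone cannot say which comparison to trust. That depends on the causal structure, for example whether severity influenced the choice of treatment.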

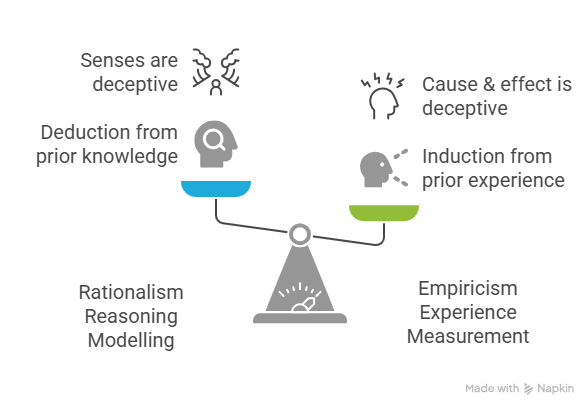

Is machine learning science?

Science, from the Latin:

- verb scire, “to know”

A systematic process for gaining knowledge about the real world.

How do we know anything?

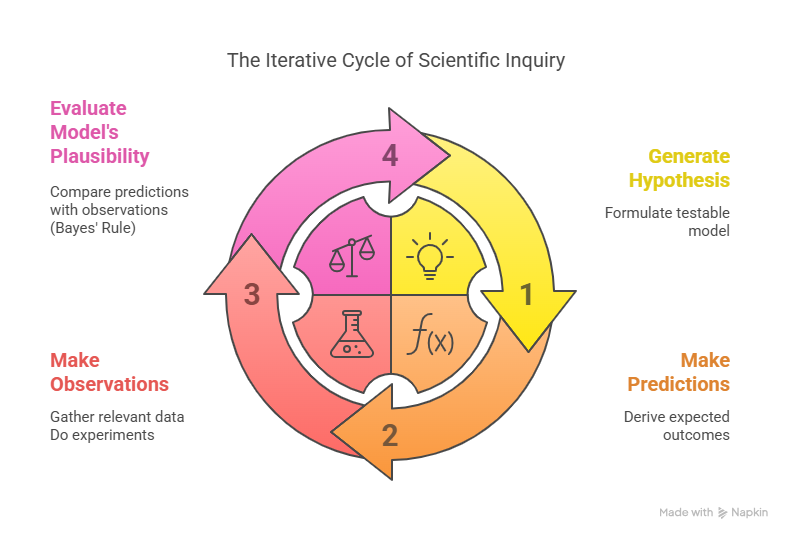

How science works

Summary

- Machine learning uses a model

- flexible structure, many parameters

- very powerful with Big Data

- often uninterpretable

- probabilistic uncertainty quantification is a work in progress

- lack of causal information is a problem

- cross-validation is limited by sample representativeness

Miscellaneous

Reporting Bayesian analyses

- The data

  - methods, key observational uncertainties

- The model

  - \(f\) function(s), \(x\) variables, \(\theta\) parameters

- Priors

  - All parameters or a subset? What joint probability distribution did you assign? What information did you use to quantify it: literature, personal judgment, expert elicitation?

Reporting Bayesian analyses

- Likelihood function

  - What likelihood function did you assign? How did you account for stochastic, systematic and representativeness errors?

- MCMC

  - Which algorithm did you use? How many chains and iterations? How did you assess convergence?
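One common way to assess convergence is the Gelman-Rubin statistic (\(\hat{R}\)), which compares between-chain and within-chain variance. The sketch below is the basic version for a single parameter, applied to simulated chains that stand in for real MCMC output. Current software typically reports a refined split, rank-normalised variant.

```python
import math
import random
import statistics


def gelman_rubin(chains):
    """Basic R-hat for one parameter from m chains of equal length n."""
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    # W: mean of the within-chain sample variances.
    W = statistics.fmean([statistics.variance(c) for c in chains])
    # B: between-chain variance, n times the sample variance of chain means.
    B = n * statistics.variance(chain_means)
    var_plus = (n - 1) / n * W + B / n
    return math.sqrt(var_plus / W)


rng = random.Random(0)

# Simulated stand-ins for MCMC output: four chains sampling the same target...
good = [[rng.gauss(0.0, 1.0) for _ in range(2000)] for _ in range(4)]
# ...and four chains stuck around two different values.
bad = [[rng.gauss(mu, 1.0) for _ in range(2000)] for mu in (0.0, 0.0, 3.0, 3.0)]

rhat_good = gelman_rubin(good)
rhat_bad = gelman_rubin(bad)
print(f"R-hat (mixed): {rhat_good:.3f}, R-hat (not mixed): {rhat_bad:.3f}")
```

Values close to 1 are consistent with the chains sampling the same distribution; values well above 1 indicate they have not mixed. Reporting the number of chains, the iterations kept and the resulting \(\hat{R}\) answers the questions above.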

Reporting Bayesian analyses

- Posterior distribution for the parameters

  - What were posterior modes, means, variances and major correlations? How different was the posterior from the prior?

- Posterior predictions

  - When using the posterior probability distribution for the parameters, how well did the model (a) reproduce data used in the calibration, (b) predict data not used in the calibration?
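The posterior summaries listed above can be computed directly from the MCMC draws. The sketch below assumes a linear model \(y = a + b x\) and uses simulated draws as a stand-in for a real posterior sample; the parameter values, \(\sigma\) and the new \(x\) are invented for illustration.

```python
import random
import statistics

rng = random.Random(42)

# Stand-in "posterior sample" for an intercept a and slope b, simulated here
# only for illustration. In practice these draws come from the MCMC output.
b_draws = [rng.gauss(2.0, 0.2) for _ in range(4000)]
a_draws = [1.0 - 0.5 * (b - 2.0) + rng.gauss(0.0, 0.1) for b in b_draws]


def correlation(u, v):
    """Pearson correlation between two equally long lists of draws."""
    mu, mv = statistics.fmean(u), statistics.fmean(v)
    cov = sum((x - mu) * (y - mv) for x, y in zip(u, v)) / (len(u) - 1)
    return cov / (statistics.stdev(u) * statistics.stdev(v))


# Posterior means, variances and the correlation between parameters.
summary = {
    "a": (statistics.fmean(a_draws), statistics.variance(a_draws)),
    "b": (statistics.fmean(b_draws), statistics.variance(b_draws)),
    "corr(a, b)": correlation(a_draws, b_draws),
}

# Posterior prediction at a new x: push every draw through the model and add
# observation noise (sigma is an assumed, fixed value in this sketch).
sigma, x_new = 0.3, 1.5
pred = sorted(a + b * x_new + rng.gauss(0.0, sigma)
              for a, b in zip(a_draws, b_draws))
lower = pred[int(0.025 * len(pred))]   # approximate 95% interval
upper = pred[int(0.975 * len(pred))]

print(summary)
print(f"95% predictive interval at x = {x_new}: ({lower:.2f}, {upper:.2f})")
```

Comparing such intervals with observations, both those used in the calibration and those held back, gives the two checks in (a) and (b).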

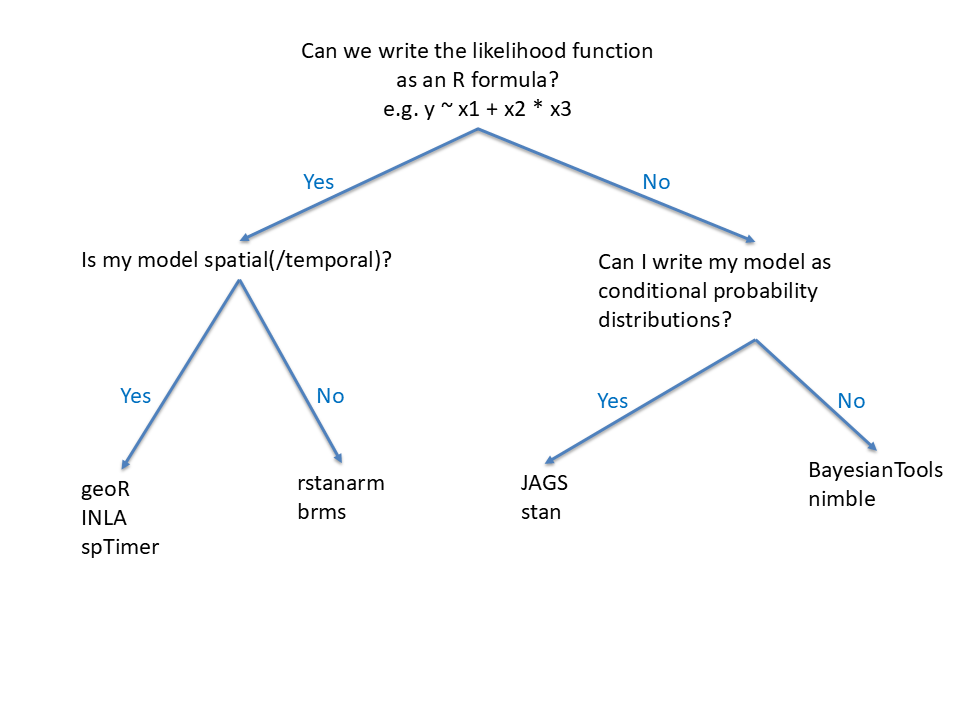

What software to use?

Resources

Course & book: Statistical Rethinking, Richard McElreath

Causal inference: Causal Inference in Statistics: A Primer, Judea Pearl et al.