Modelling non-Gaussian data

We will return to running generalised mixed-effects models (glmer) in rstanarm, as we did in the first practical, this time considering non-Gaussian data, with the distribution specified using the family argument.

Example 1: Roaches

First we examine a dataset with a count response variable, roaches (Gelman & Hill 2007), which is available in the rstanarm package and for which there is a vignette for the glm analysis. We will adapt this for our exercise to make this a hierarchical model using glmer.

The dataset is intended to explore the efficacy of a roach pest management treatment. Our response \(y_{i}\) is the number of roaches caught in traps in apartment \(i\). Either a treatment (1) or control (0) was applied to each apartment, and the variable roach1 gives the number of roaches caught pre-treatment. There is also a variable, senior, indicating whether the apartment is in a building restricted to elderly residents. Because the number of days for which the roach traps were used is not the same for all apartments in the sample (i.e. there were different levels of opportunity to catch the roaches), we also include that as an ‘exposure’ term, which can be specified using the offset argument to stan_glm.
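Written out, an offset on the log scale amounts to modelling a rate per unit of exposure. The following is a sketch in our own notation, assuming a log link (as used later in this practical); the symbols \(\lambda_i\) for the expected count, \(u_i\) for the exposure and \(\beta\) for the coefficients are assumptions introduced here for illustration:

\[
\log \lambda_i = \log u_i + \beta_0 + \beta_1\,\text{roach1}_i + \beta_2\,\text{treatment}_i + \beta_3\,\text{senior}_i, \qquad \lambda_i = \mathrm{E}[y_i]
\]

Equivalently, \(\lambda_i / u_i = \exp(\beta_0 + \beta_1\,\text{roach1}_i + \beta_2\,\text{treatment}_i + \beta_3\,\text{senior}_i)\), so the coefficients describe the expected catch per unit of exposure. The offset term \(\log u_i\) has its coefficient fixed at 1 rather than estimated.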

Following the vignette example, we rescale the roach1 variable. We additionally generate and add a new grouping variable, loc, to denote clustered locations of the apartments so that we can demonstrate a hierarchical model. Spatial clustering of apartments could matter if roach population sizes vary over space (e.g. due to habitat or breeding success factors), thereby affecting the number of roaches caught beyond the experimental treatments we are trying to observe.

library(ggplot2)
library(bayesplot) # you may need to install this one!

This is bayesplot version 1.13.0

- Online documentation and vignettes at mc-stan.org/bayesplot

- bayesplot theme set to bayesplot::theme_default()

* Does _not_ affect other ggplot2 plots

* See ?bayesplot_theme_set for details on theme setting

library(rstanarm)

This is rstanarm version 2.32.1

- See https://mc-stan.org/rstanarm/articles/priors for changes to default priors!

- Default priors may change, so it's safest to specify priors, even if equivalent to the defaults.

- For execution on a local, multicore CPU with excess RAM we recommend calling

options(mc.cores = parallel::detectCores())

data(roaches)
head(roaches)

y roach1 treatment senior exposure2

1 153 308.00 1 0 0.800000

2 127 331.25 1 0 0.600000

3 7 1.67 1 0 1.000000

4 7 3.00 1 0 1.000000

5 0 2.00 1 0 1.142857

6 0 0.00 1 0 1.000000

# Rescale
roaches$roach1 <- roaches$roach1 / 100
# Randomly assign 'location' number as a new grouping term
set.seed(2) # to ensure we get the same numbers each time
loc <- sample(1:12, size = 262, replace = TRUE)
loc

[1] 5 6 6 8 1 1 12 9 2 11 1 3 6 2 3 7 8 7 1 6 9 4 11 6 9

[26] 8 6 3 9 7 8 6 2 7 2 3 4 3 1 7 9 1 2 8 4 5 12 2 5 6

[51] 7 2 6 12 4 12 4 9 10 2 6 6 3 3 8 6 1 5 6 1 10 9 5 8 4

[76] 1 1 5 11 9 10 5 11 1 12 9 2 9 7 12 4 1 4 6 8 9 6 7 12 7

[101] 3 5 4 5 9 5 10 3 11 1 7 9 11 5 3 3 6 1 12 2 10 4 11 9 1

[126] 7 3 5 8 4 12 2 8 1 5 4 7 8 3 8 10 1 11 1 4 10 2 4 2 12

[151] 6 6 12 3 5 11 5 2 2 6 3 5 2 4 11 2 7 2 5 8 12 12 11 11 3

[176] 6 9 8 6 9 6 7 11 6 8 6 11 2 12 3 3 11 6 10 7 2 8 7 4 1

[201] 4 10 3 1 9 1 4 8 10 10 11 2 11 5 3 4 11 5 7 4 8 10 8 6 2

[226] 12 4 6 6 2 9 3 12 10 5 4 5 12 6 10 4 9 6 9 7 10 4 5 8 7

[251] 10 6 3 10 3 10 7 5 9 1 5 12

roaches_new <- cbind(roaches, loc)
head(roaches_new)

y roach1 treatment senior exposure2 loc

1 153 3.0800 1 0 0.800000 5

2 127 3.3125 1 0 0.600000 6

3 7 0.0167 1 0 1.000000 6

4 7 0.0300 1 0 1.000000 8

5 0 0.0200 1 0 1.142857 1

6 0 0.0000 1 0 1.000000 1

First, let’s run the models using the functions glm (without the grouping term) and glmer (with the grouping term loc). Notice that, in contrast to the first practical, we specify a Poisson distribution with a log link function, family = poisson(link = "log"). This is one of the families suitable for count data, but it makes the fairly stringent assumption that the conditional mean and variance are equal.
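To make the mean-variance assumption concrete, here is a minimal sketch in base R that simulates Poisson counts and compares their mean and variance. The mean of 25 and the seed are arbitrary values chosen for illustration; they are not taken from the roaches data.

```r
# Simulate Poisson counts and compare their mean and variance.
set.seed(1)                    # arbitrary seed, for reproducibility only
lambda <- 25                   # hypothetical mean count
y_sim <- rpois(10000, lambda)

mean_sim <- mean(y_sim)
var_sim <- var(y_sim)
dispersion_sim <- var_sim / mean_sim   # close to 1 for Poisson data

print(c(mean = mean_sim, variance = var_sim, ratio = dispersion_sim))
```

Applying the same ratio to the raw counts, var(roaches$y) / mean(roaches$y), gives a quick but crude first look at overdispersion, since it ignores the covariates and the exposure.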

As with the example in the first practical, we can choose how to specify the random effects. Do we expect location to just shift the intercepts of the relationships between pre-treatment roaches and post-treatment roaches, or could the slopes of those relationships also vary across locations? We produce two versions of the glmer model. Other combinations could be possible, for example treatment efficacy having a different effect across locations.
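The two random-effects structures can be written out explicitly. This is a sketch in our own notation, which is an assumption rather than something taken from the vignette: \(\lambda_i\) is the expected count for apartment \(i\), \(u_i\) its exposure, \(j[i]\) the location of apartment \(i\), and \(b\) the location-level deviations.

\[
\text{Random intercept:}\quad \log \lambda_i = \log u_i + (\beta_0 + b_{0,j[i]}) + \beta_1\,\text{roach1}_i + \beta_2\,\text{treatment}_i + \beta_3\,\text{senior}_i, \qquad b_{0,j} \sim \mathrm{N}(0, \sigma_0^2)
\]

\[
\text{Random intercept and slope:}\quad \log \lambda_i = \log u_i + (\beta_0 + b_{0,j[i]}) + (\beta_1 + b_{1,j[i]})\,\text{roach1}_i + \beta_2\,\text{treatment}_i + \beta_3\,\text{senior}_i, \qquad (b_{0,j}, b_{1,j}) \sim \mathrm{MVN}(\mathbf{0}, \Sigma)
\]

In lme4 formula syntax these correspond to (1 | loc) and (roach1 | loc) respectively.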

library(lme4)

# Estimate original model
glm1 <- glm(y ~ roach1 + treatment + senior, offset = log(exposure2),
            data = roaches_new, family = poisson(link = "log"))
summary(glm1)

Call:

glm(formula = y ~ roach1 + treatment + senior, family = poisson(link = "log"),

data = roaches_new, offset = log(exposure2))

Coefficients:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 3.089246 0.021234 145.49 <2e-16 ***

roach1 0.698289 0.008874 78.69 <2e-16 ***

treatment -0.516726 0.024739 -20.89 <2e-16 ***

senior -0.379875 0.033418 -11.37 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for poisson family taken to be 1)

Null deviance: 16954 on 261 degrees of freedom

Residual deviance: 11429 on 258 degrees of freedom

AIC: 12192

Number of Fisher Scoring iterations: 6

# Estimate candidate mixed-effects models
# Random intercept for location
glm2 <- glmer(y ~ roach1 + treatment + senior + (1 | loc), offset = log(exposure2),
              data = roaches_new, family = poisson(link = "log"))
summary(glm2)

Generalized linear mixed model fit by maximum likelihood (Laplace

Approximation) [glmerMod]

Family: poisson ( log )

Formula: y ~ roach1 + treatment + senior + (1 | loc)

Data: roaches_new

Offset: log(exposure2)

AIC BIC logLik -2*log(L) df.resid

11646.4 11664.2 -5818.2 11636.4 257

Scaled residuals:

Min 1Q Median 3Q Max

-11.724 -3.885 -2.556 0.106 45.003

Random effects:

Groups Name Variance Std.Dev.

loc (Intercept) 0.1192 0.3453

Number of obs: 262, groups: loc, 12

Fixed effects:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 3.024587 0.102076 29.63 <2e-16 ***

roach1 0.747000 0.009907 75.40 <2e-16 ***

treatment -0.534992 0.025625 -20.88 <2e-16 ***

senior -0.456364 0.034395 -13.27 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

(Intr) roach1 trtmnt

roach1 -0.120

treatment -0.106 -0.050

senior -0.061 0.169 -0.124

$loc

(Intercept)

1 -0.228774670

2 -0.010197754

3 0.003867703

4 0.410392652

5 0.262560694

6 -0.004889566

7 -0.424295616

8 0.279652316

9 -0.720059821

10 0.397487779

11 -0.275254016

12 0.321418319

with conditional variances for "loc"

# Random intercept and random slope of roach1 for location
glm3 <- glmer(y ~ roach1 + treatment + senior + (roach1 | loc), offset = log(exposure2),
              data = roaches_new, family = poisson(link = "log"))
summary(glm3)

Generalized linear mixed model fit by maximum likelihood (Laplace

Approximation) [glmerMod]

Family: poisson ( log )

Formula: y ~ roach1 + treatment + senior + (roach1 | loc)

Data: roaches_new

Offset: log(exposure2)

AIC BIC logLik -2*log(L) df.resid

11097.0 11122.0 -5541.5 11083.0 255

Scaled residuals:

Min 1Q Median 3Q Max

-10.591 -3.662 -2.624 0.753 44.072

Random effects:

Groups Name Variance Std.Dev. Corr

loc (Intercept) 0.1211 0.3480

roach1 0.0604 0.2458 -0.58

Number of obs: 262, groups: loc, 12

Fixed effects:

Estimate Std. Error z value Pr(>|z|)

(Intercept) 2.91616 0.10347 28.18 <2e-16 ***

roach1 0.82682 0.07215 11.46 <2e-16 ***

treatment -0.48798 0.02823 -17.29 <2e-16 ***

senior -0.38332 0.03602 -10.64 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Correlation of Fixed Effects:

(Intr) roach1 trtmnt

roach1 -0.574

treatment -0.124 -0.006

senior -0.063 0.016 -0.124

$loc

(Intercept) roach1

1 -0.38402166 0.16363544

2 0.12793470 -0.11873824

3 -0.13447383 0.09681217

4 0.61808995 -0.33537557

5 0.16990675 0.06697628

6 0.15750920 -0.12989373

7 0.31700678 -0.39350123

8 0.35363777 -0.08569835

9 -0.48875228 -0.20979966

10 0.03441243 0.33278494

11 -0.49885510 0.24390528

12 -0.25279834 0.37263770

with conditional variances for "loc"

For simplicity, let’s proceed with model 2 (the random-intercept model), this time using stan_glmer. The code below uses the default settings for chains (4) and iterations (2000) because we have not set them explicitly. What information could be used to help inform our priors?
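One way to start thinking about that question is to translate a candidate prior from the log scale to the multiplicative scale. The sketch below uses only base R and assumes a normal prior with mean 0 and standard deviation 2.5 on a coefficient under a log link; the variable names are our own.

```r
# A normal(0, 2.5) prior on a log-link coefficient, expressed as a
# multiplicative effect on the expected count.
prior_sd <- 2.5

# Central 95% prior interval on the log scale
log_interval <- qnorm(c(0.025, 0.975), mean = 0, sd = prior_sd)

# The same interval as a ratio of expected counts per unit change in the predictor
ratio_interval <- exp(log_interval)

print(round(log_interval, 3))
print(signif(ratio_interval, 3))
```

If the implied range of multiplicative effects looks implausibly wide or narrow for the predictor in question, that is a cue to adjust the prior scale, ideally using information from earlier studies or subject-matter knowledge.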

# Estimate Bayesian version with stan_glmer
stan_glm2 <- stan_glmer(y ~ roach1 + treatment + senior + (1 | loc), offset = log(exposure2),
                        data = roaches_new, family = poisson(link = "log"),
                        prior = normal(0, 2.5),
                        prior_intercept = normal(0, 5),
                        seed = 12345)
# Report the priors that were used
prior_summary(stan_glm2)

SAMPLING FOR MODEL 'count' NOW (CHAIN 1).

Chain 1:

Chain 1: Gradient evaluation took 0.000262 seconds

Chain 1: 1000 transitions using 10 leapfrog steps per transition would take 2.62 seconds.

Chain 1: Adjust your expectations accordingly!

Chain 1:

Chain 1:

Chain 1: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 1: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 1: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 1: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 1: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 1: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 1: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 1: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 1: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 1: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 1: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 1: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 1:

Chain 1: Elapsed Time: 4.782 seconds (Warm-up)

Chain 1: 2.337 seconds (Sampling)

Chain 1: 7.119 seconds (Total)

Chain 1:

SAMPLING FOR MODEL 'count' NOW (CHAIN 2).

Chain 2:

Chain 2: Gradient evaluation took 6e-05 seconds

Chain 2: 1000 transitions using 10 leapfrog steps per transition would take 0.6 seconds.

Chain 2: Adjust your expectations accordingly!

Chain 2:

Chain 2:

Chain 2: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 2: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 2: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 2: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 2: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 2: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 2: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 2: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 2: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 2: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 2: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 2: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 2:

Chain 2: Elapsed Time: 4.285 seconds (Warm-up)

Chain 2: 3.029 seconds (Sampling)

Chain 2: 7.314 seconds (Total)

Chain 2:

SAMPLING FOR MODEL 'count' NOW (CHAIN 3).

Chain 3:

Chain 3: Gradient evaluation took 6.1e-05 seconds

Chain 3: 1000 transitions using 10 leapfrog steps per transition would take 0.61 seconds.

Chain 3: Adjust your expectations accordingly!

Chain 3:

Chain 3:

Chain 3: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 3: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 3: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 3: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 3: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 3: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 3: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 3: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 3: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 3: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 3: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 3: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 3:

Chain 3: Elapsed Time: 3.862 seconds (Warm-up)

Chain 3: 2.961 seconds (Sampling)

Chain 3: 6.823 seconds (Total)

Chain 3:

SAMPLING FOR MODEL 'count' NOW (CHAIN 4).

Chain 4:

Chain 4: Gradient evaluation took 5.8e-05 seconds

Chain 4: 1000 transitions using 10 leapfrog steps per transition would take 0.58 seconds.

Chain 4: Adjust your expectations accordingly!

Chain 4:

Chain 4:

Chain 4: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 4: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 4: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 4: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 4: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 4: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 4: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 4: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 4: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 4: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 4: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 4: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 4:

Chain 4: Elapsed Time: 3.358 seconds (Warm-up)

Chain 4: 2.658 seconds (Sampling)

Chain 4: 6.016 seconds (Total)

Chain 4:

Priors for model 'stan_glm2'

------

Intercept (after predictors centered)

~ normal(location = 0, scale = 5)

Coefficients

~ normal(location = [0,0,0], scale = [2.5,2.5,2.5])

Covariance

~ decov(reg. = 1, conc. = 1, shape = 1, scale = 1)

------

See help('prior_summary.stanreg') for more details

Interpreting the model

$loc

(Intercept) roach1 treatment senior

1 2.795812 0.7470005 -0.5349923 -0.4563642

2 3.014389 0.7470005 -0.5349923 -0.4563642

3 3.028454 0.7470005 -0.5349923 -0.4563642

4 3.434979 0.7470005 -0.5349923 -0.4563642

5 3.287147 0.7470005 -0.5349923 -0.4563642

6 3.019697 0.7470005 -0.5349923 -0.4563642

7 2.600291 0.7470005 -0.5349923 -0.4563642

8 3.304239 0.7470005 -0.5349923 -0.4563642

9 2.304527 0.7470005 -0.5349923 -0.4563642

10 3.422074 0.7470005 -0.5349923 -0.4563642

11 2.749332 0.7470005 -0.5349923 -0.4563642

12 3.346005 0.7470005 -0.5349923 -0.4563642

attr(,"class")

[1] "coef.mer"

$loc

(Intercept) roach1 treatment senior

1 2.793090 0.7473234 -0.5336443 -0.4575336

2 3.012650 0.7473234 -0.5336443 -0.4575336

3 3.026102 0.7473234 -0.5336443 -0.4575336

4 3.433441 0.7473234 -0.5336443 -0.4575336

5 3.282963 0.7473234 -0.5336443 -0.4575336

6 3.017244 0.7473234 -0.5336443 -0.4575336

7 2.597886 0.7473234 -0.5336443 -0.4575336

8 3.303320 0.7473234 -0.5336443 -0.4575336

9 2.295207 0.7473234 -0.5336443 -0.4575336

10 3.417976 0.7473234 -0.5336443 -0.4575336

11 2.745987 0.7473234 -0.5336443 -0.4575336

12 3.344886 0.7473234 -0.5336443 -0.4575336

attr(,"class")

[1] "coef.mer"

print(stan_glm2) # for further information on interpretation: ?print.stanreg

stan_glmer

family: poisson [log]

formula: y ~ roach1 + treatment + senior + (1 | loc)

observations: 262

------

Median MAD_SD

(Intercept) 3.0 0.1

roach1 0.7 0.0

treatment -0.5 0.0

senior -0.5 0.0

Error terms:

Groups Name Std.Dev.

loc (Intercept) 0.41

Num. levels: loc 12

------

* For help interpreting the printed output see ?print.stanreg

* For info on the priors used see ?prior_summary.stanreg

summary(stan_glm2, digits = 5)

Model Info:

function: stan_glmer

family: poisson [log]

formula: y ~ roach1 + treatment + senior + (1 | loc)

algorithm: sampling

sample: 4000 (posterior sample size)

priors: see help('prior_summary')

observations: 262

groups: loc (12)

Estimates:

mean sd 10% 50% 90%

(Intercept) 3.02760 0.12105 2.88069 3.02444 3.17537

roach1 0.74714 0.00969 0.73455 0.74732 0.75962

treatment -0.53433 0.02504 -0.56689 -0.53364 -0.50278

senior -0.45723 0.03482 -0.50208 -0.45753 -0.41175

b[(Intercept) loc:1] -0.23333 0.12765 -0.39094 -0.23135 -0.07800

b[(Intercept) loc:2] -0.01484 0.12411 -0.16746 -0.01179 0.13655

b[(Intercept) loc:3] -0.00110 0.12488 -0.15464 0.00166 0.14660

b[(Intercept) loc:4] 0.40655 0.12494 0.25540 0.40900 0.55636

b[(Intercept) loc:5] 0.25814 0.12555 0.10281 0.25852 0.41148

b[(Intercept) loc:6] -0.00833 0.12365 -0.15715 -0.00720 0.14336

b[(Intercept) loc:7] -0.42857 0.12637 -0.58643 -0.42655 -0.27513

b[(Intercept) loc:8] 0.27573 0.12521 0.12424 0.27888 0.42535

b[(Intercept) loc:9] -0.72949 0.13203 -0.89339 -0.72923 -0.56643

b[(Intercept) loc:10] 0.39339 0.12543 0.24198 0.39353 0.54486

b[(Intercept) loc:11] -0.28010 0.13118 -0.44270 -0.27845 -0.11774

b[(Intercept) loc:12] 0.31766 0.12500 0.16285 0.32045 0.46735

Sigma[loc:(Intercept),(Intercept)] 0.16604 0.08730 0.08493 0.14647 0.26780

Fit Diagnostics:

mean sd 10% 50% 90%

mean_PPD 25.65330 0.45286 25.06107 25.65649 26.23282

The mean_ppd is the sample average posterior predictive distribution of the outcome variable (for details see help('summary.stanreg')).

MCMC diagnostics

mcse Rhat n_eff

(Intercept) 0.00463 1.00397 684

roach1 0.00016 1.00080 3896

treatment 0.00043 0.99965 3380

senior 0.00067 1.00072 2686

b[(Intercept) loc:1] 0.00469 1.00363 741

b[(Intercept) loc:2] 0.00467 1.00350 706

b[(Intercept) loc:3] 0.00464 1.00316 725

b[(Intercept) loc:4] 0.00464 1.00331 726

b[(Intercept) loc:5] 0.00467 1.00441 724

b[(Intercept) loc:6] 0.00468 1.00343 699

b[(Intercept) loc:7] 0.00457 1.00303 764

b[(Intercept) loc:8] 0.00461 1.00301 737

b[(Intercept) loc:9] 0.00452 1.00242 855

b[(Intercept) loc:10] 0.00461 1.00416 741

b[(Intercept) loc:11] 0.00467 1.00325 788

b[(Intercept) loc:12] 0.00462 1.00374 733

Sigma[loc:(Intercept),(Intercept)] 0.00279 1.00489 976

mean_PPD 0.00724 0.99955 3909

log-posterior 0.14779 1.00788 668

For each parameter, mcse is Monte Carlo standard error, n_eff is a crude measure of effective sample size, and Rhat is the potential scale reduction factor on split chains (at convergence Rhat=1).

plot(stan_glm2, "trace") # nice hairy caterpillars!

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

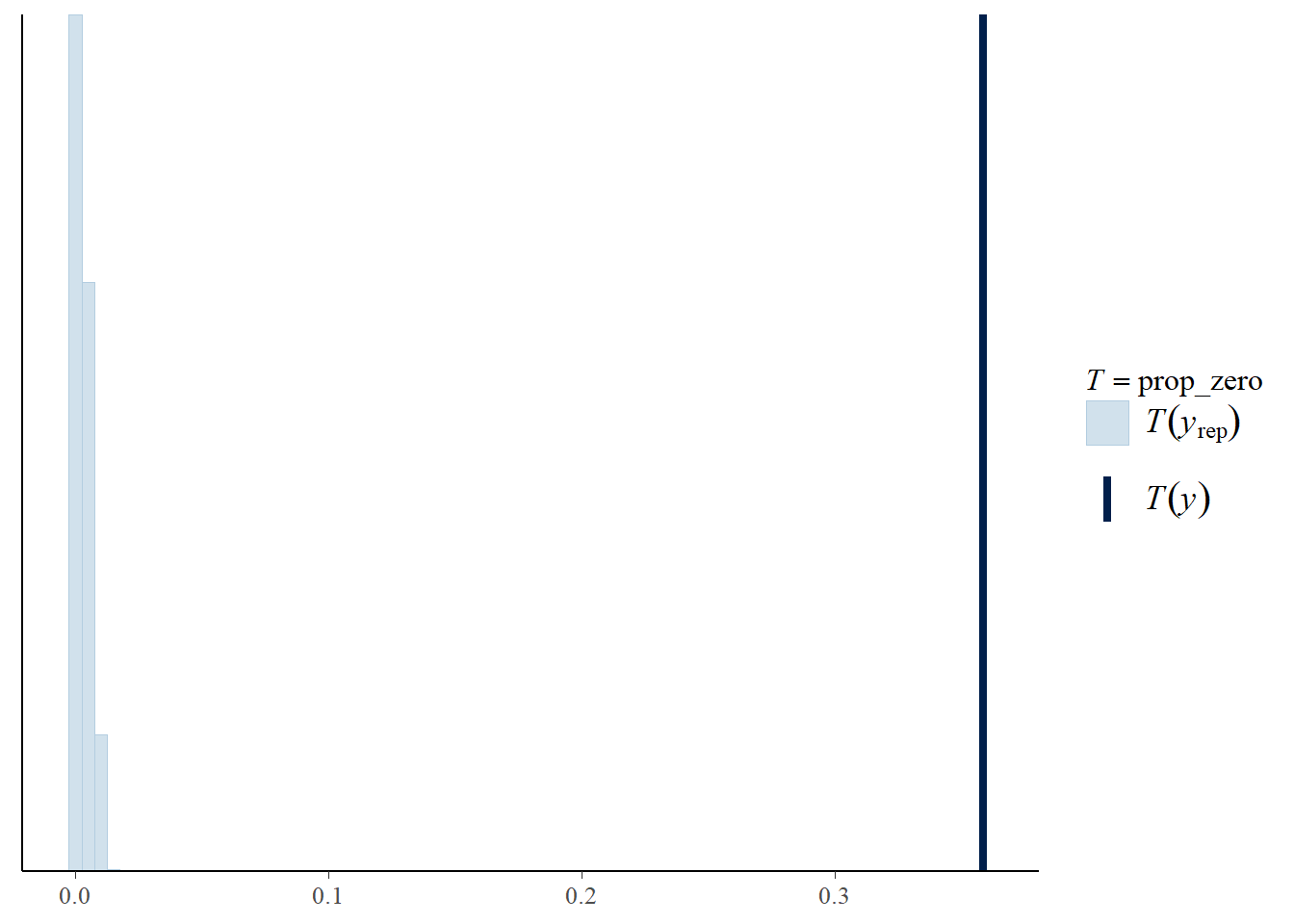

yrep <- posterior_predict(stan_glm2)
# yrep will be an S x N matrix, where S is the size of the posterior sample and
# N is the number of data points. Each row of yrep represents a full dataset
# generated from the posterior predictive distribution.
dim(yrep)
pp_check(stan_glm2) # this does not look great! Can you describe what is happening here?
# Test statistic: the proportion of zeros in a vector of counts
prop_zero <- function(y) mean(y == 0)
(prop_zero_test1 <- pp_check(stan_glm2, plotfun = "stat", stat = "prop_zero", binwidth = .005))

The proportion of zeros computed from the sample y is the dark blue vertical line (>35% zeros), and the light blue bars show the proportions from the replicated datasets generated by the model. What do we think of our model?

We should consider a model that more accurately accounts for the large proportion of zeros in the data. One option is to use a negative binomial distribution, which is often used for zero-inflated or overdispersed count data. It is more flexible than the Poisson because the (conditional) mean and variance of \(y\) can differ. We update the model with the new family, as follows:
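To see why the extra flexibility matters for zeros, here is a minimal base R simulation comparing Poisson and negative binomial draws with the same mean. The mean of 25 and the dispersion value of 0.3 are hypothetical choices for illustration, not estimates from the roaches model. R's rnbinom with the mu and size arguments has variance mu + mu^2 / size, so a small size means strong overdispersion.

```r
# Compare the proportion of zeros under Poisson and negative binomial
# distributions that share the same mean.
set.seed(1)        # arbitrary seed, for reproducibility only
n_draws <- 10000
mu <- 25           # hypothetical mean count
size <- 0.3        # hypothetical dispersion; smaller = more overdispersed

y_pois <- rpois(n_draws, lambda = mu)
y_nb <- rnbinom(n_draws, mu = mu, size = size)

# Proportion of zeros in a vector of counts
prop_zero <- function(y) mean(y == 0)

comparison <- data.frame(
  model = c("Poisson", "Negative binomial"),
  mean = c(mean(y_pois), mean(y_nb)),
  variance = c(var(y_pois), var(y_nb)),
  prop_zero = c(prop_zero(y_pois), prop_zero(y_nb))
)
print(comparison)
```

With a mean this large, a Poisson distribution produces essentially no zeros, whereas the overdispersed negative binomial produces many, which is the pattern the posterior predictive check is probing.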

stan_glm_nb <- update(stan_glm2, family = neg_binomial_2)

SAMPLING FOR MODEL 'count' NOW (CHAIN 1).

Chain 1:

Chain 1: Gradient evaluation took 0.000166 seconds

Chain 1: 1000 transitions using 10 leapfrog steps per transition would take 1.66 seconds.

Chain 1: Adjust your expectations accordingly!

Chain 1:

Chain 1:

Chain 1: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 1: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 1: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 1: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 1: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 1: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 1: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 1: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 1: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 1: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 1: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 1: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 1:

Chain 1: Elapsed Time: 2.984 seconds (Warm-up)

Chain 1: 1.952 seconds (Sampling)

Chain 1: 4.936 seconds (Total)

Chain 1:

SAMPLING FOR MODEL 'count' NOW (CHAIN 2).

Chain 2:

Chain 2: Gradient evaluation took 0.000142 seconds

Chain 2: 1000 transitions using 10 leapfrog steps per transition would take 1.42 seconds.

Chain 2: Adjust your expectations accordingly!

Chain 2:

Chain 2:

Chain 2: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 2: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 2: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 2: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 2: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 2: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 2: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 2: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 2: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 2: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 2: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 2: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 2:

Chain 2: Elapsed Time: 3.982 seconds (Warm-up)

Chain 2: 2.566 seconds (Sampling)

Chain 2: 6.548 seconds (Total)

Chain 2:

SAMPLING FOR MODEL 'count' NOW (CHAIN 3).

Chain 3:

Chain 3: Gradient evaluation took 0.000449 seconds

Chain 3: 1000 transitions using 10 leapfrog steps per transition would take 4.49 seconds.

Chain 3: Adjust your expectations accordingly!

Chain 3:

Chain 3:

Chain 3: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 3: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 3: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 3: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 3: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 3: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 3: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 3: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 3: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 3: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 3: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 3: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 3:

Chain 3: Elapsed Time: 3.786 seconds (Warm-up)

Chain 3: 2.826 seconds (Sampling)

Chain 3: 6.612 seconds (Total)

Chain 3:

SAMPLING FOR MODEL 'count' NOW (CHAIN 4).

Chain 4:

Chain 4: Gradient evaluation took 0.000144 seconds

Chain 4: 1000 transitions using 10 leapfrog steps per transition would take 1.44 seconds.

Chain 4: Adjust your expectations accordingly!

Chain 4:

Chain 4:

Chain 4: Iteration: 1 / 2000 [ 0%] (Warmup)

Chain 4: Iteration: 200 / 2000 [ 10%] (Warmup)

Chain 4: Iteration: 400 / 2000 [ 20%] (Warmup)

Chain 4: Iteration: 600 / 2000 [ 30%] (Warmup)

Chain 4: Iteration: 800 / 2000 [ 40%] (Warmup)

Chain 4: Iteration: 1000 / 2000 [ 50%] (Warmup)

Chain 4: Iteration: 1001 / 2000 [ 50%] (Sampling)

Chain 4: Iteration: 1200 / 2000 [ 60%] (Sampling)

Chain 4: Iteration: 1400 / 2000 [ 70%] (Sampling)

Chain 4: Iteration: 1600 / 2000 [ 80%] (Sampling)

Chain 4: Iteration: 1800 / 2000 [ 90%] (Sampling)

Chain 4: Iteration: 2000 / 2000 [100%] (Sampling)

Chain 4:

Chain 4: Elapsed Time: 4.071 seconds (Warm-up)

Chain 4: 3.719 seconds (Sampling)

Chain 4: 7.79 seconds (Total)

Chain 4:

prop_zero_test2 <- pp_check(stan_glm_nb, plotfun = "stat", stat = "prop_zero",
                            binwidth = 0.01)
prior_summary(stan_glm_nb)

Priors for model 'stan_glm_nb'

------

Intercept (after predictors centered)

~ normal(location = 0, scale = 5)

Coefficients

~ normal(location = [0,0,0], scale = [2.5,2.5,2.5])

Auxiliary (reciprocal_dispersion)

~ exponential(rate = 1)

Covariance

~ decov(reg. = 1, conc. = 1, shape = 1, scale = 1)

------

See help('prior_summary.stanreg') for more details

$loc

(Intercept) roach1 treatment senior

1 2.849141 1.314065 -0.7997935 -0.4109909

2 2.853071 1.314065 -0.7997935 -0.4109909

3 2.834623 1.314065 -0.7997935 -0.4109909

4 3.000969 1.314065 -0.7997935 -0.4109909

5 2.883617 1.314065 -0.7997935 -0.4109909

6 2.867341 1.314065 -0.7997935 -0.4109909

7 2.853989 1.314065 -0.7997935 -0.4109909

8 2.875434 1.314065 -0.7997935 -0.4109909

9 2.805406 1.314065 -0.7997935 -0.4109909

10 2.877205 1.314065 -0.7997935 -0.4109909

11 2.823158 1.314065 -0.7997935 -0.4109909

12 2.862583 1.314065 -0.7997935 -0.4109909

attr(,"class")

[1] "coef.mer"

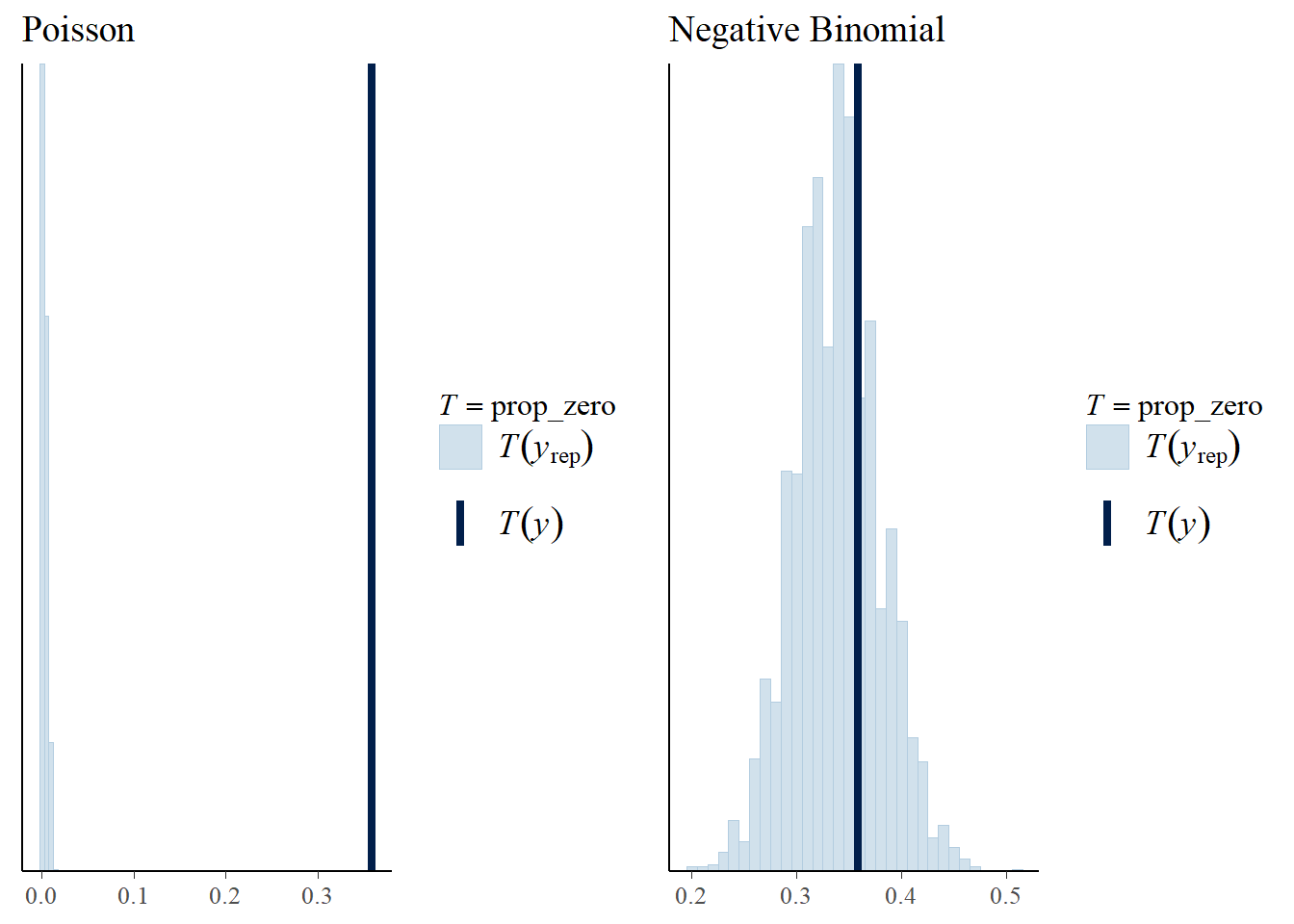

# Show graphs for Poisson and negative binomial side by side - we use this function from the bayesplot package
bayesplot_grid(prop_zero_test1 + ggtitle("Poisson"),
               prop_zero_test2 + ggtitle("Negative Binomial"),
               grid_args = list(ncol = 2))

We can see clearly that the updated model is doing a better job. At this point

Example 2: Climbing expeditions (…or other success/survival outcomes)

For this part of the practical we make use of an example from Johnson, Ott and Dogucu (2021), which presents a subset of The Himalayan Database (2020), https://www.himalayandatabase.com/.

The climbers.csv file records the outcomes (success in reaching the destination) of 200 climbing expeditions. Each row represents a climber (member_id) and their individual success outcome (true or false) for a given expedition_id. The other predictors of outcomes include climber age, season, expedition_role and oxygen_used. expedition_id groups members across the dataset because each expedition has multiple climbers. This grouping variable can be considered structure within the dataset, because members within a team share similar conditions (same weather, same destination, same instructors).

Let’s read in the data and visually explore.

Warning: package 'bayesrules' was built under R version 4.4.3

climbers <- read.csv(url("https://raw.githubusercontent.com/NERC-CEH/beem_data/main/climbers.csv"))
head(climbers)

X expedition_id member_id success year season age expedition_role

1 1 AMAD81101 AMAD81101-03 TRUE 1981 Spring 28 Climber

2 2 AMAD81101 AMAD81101-04 TRUE 1981 Spring 27 Exp Doctor

3 3 AMAD81101 AMAD81101-02 TRUE 1981 Spring 35 Deputy Leader

4 4 AMAD81101 AMAD81101-05 TRUE 1981 Spring 37 Climber

5 5 AMAD81101 AMAD81101-06 TRUE 1981 Spring 43 Climber

6 6 AMAD81101 AMAD81101-07 FALSE 1981 Spring 38 Climber

oxygen_used

1 FALSE

2 FALSE

3 FALSE

4 FALSE

5 FALSE

6 FALSE

library(tidyverse)

── Attaching core tidyverse packages ──────────────────────── tidyverse 2.0.0 ──

✔ dplyr 1.1.4 ✔ readr 2.1.5

✔ forcats 1.0.0 ✔ stringr 1.5.1

✔ lubridate 1.9.4 ✔ tibble 3.3.0

✔ purrr 1.0.4 ✔ tidyr 1.3.1

── Conflicts ────────────────────────────────────────── tidyverse_conflicts() ──

✖ tidyr::expand() masks Matrix::expand()

✖ dplyr::filter() masks stats::filter()

✖ dplyr::lag() masks stats::lag()

✖ tidyr::pack() masks Matrix::pack()

✖ tidyr::unpack() masks Matrix::unpack()

ℹ Use the conflicted package (<http://conflicted.r-lib.org/>) to force all conflicts to become errors

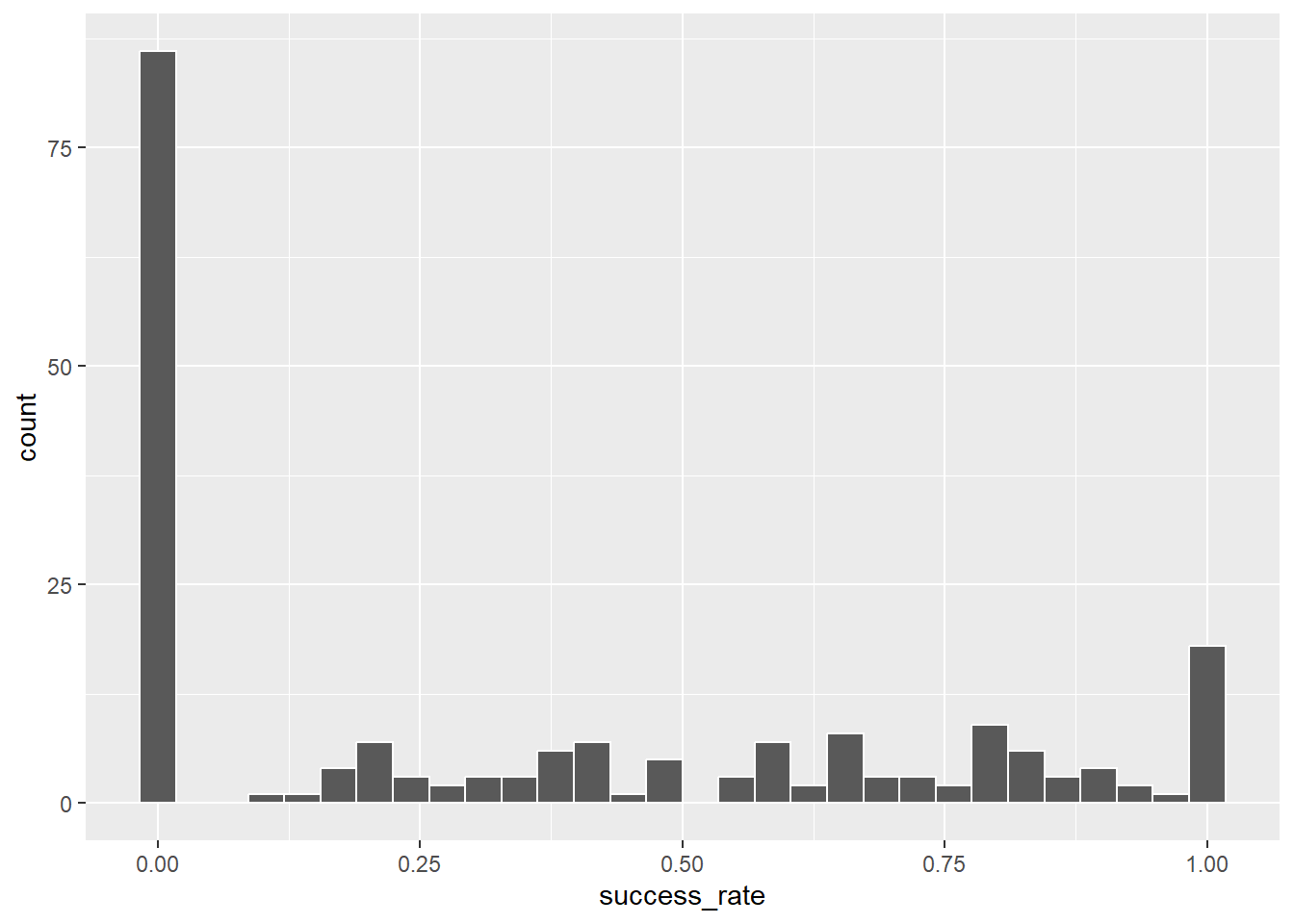

# Success rate per expedition
expedition_success <- climbers %>%
  group_by(expedition_id) %>%
  summarise(success_rate = mean(success))
ggplot(expedition_success, aes(x = success_rate)) +
  geom_histogram(color = "white")

`stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

What type of distribution would be appropriate for modelling the outcomes?
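As a hint, a true/false outcome is usually modelled on the log-odds scale and mapped back to a probability with the inverse-logit function. The sketch below uses base R only; the log-odds values and the expedition-level shift are hypothetical numbers chosen for illustration, not estimates from the climbers data.

```r
# Inverse-logit: map values on the log-odds scale to probabilities.
log_odds <- c(-2, 0, 2)        # hypothetical linear-predictor values
prob <- plogis(log_odds)       # same as 1 / (1 + exp(-log_odds))

# A hypothetical expedition-level intercept shifts every member's log-odds
# by the same amount, raising (or lowering) all their success probabilities.
expedition_shift <- 1.5
prob_shifted <- plogis(log_odds + expedition_shift)

print(round(cbind(log_odds, prob, prob_shifted), 3))
```

This is the sense in which a random intercept for expedition_id lets climbers on the same expedition share a common tendency towards success or failure.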

climb_stan_glm1 <- stan_glmer(success ~ age + oxygen_used + (1 | expedition_id),
                              data = climbers, family = binomial,
                              prior_intercept = normal(0, 2.5, autoscale = TRUE),
                              prior = normal(0, 2.5, autoscale = TRUE),
                              prior_covariance = decov(reg = 1, conc = 1, shape = 1, scale = 1),
                              chains = 4, iter = 2500, seed = 84735)

SAMPLING FOR MODEL 'bernoulli' NOW (CHAIN 1).

Chain 1:

Chain 1: Gradient evaluation took 0.001143 seconds

Chain 1: 1000 transitions using 10 leapfrog steps per transition would take 11.43 seconds.

Chain 1: Adjust your expectations accordingly!

Chain 1:

Chain 1:

Chain 1: Iteration: 1 / 2500 [ 0%] (Warmup)

Chain 1: Iteration: 250 / 2500 [ 10%] (Warmup)

Chain 1: Iteration: 500 / 2500 [ 20%] (Warmup)

Chain 1: Iteration: 750 / 2500 [ 30%] (Warmup)

Chain 1: Iteration: 1000 / 2500 [ 40%] (Warmup)

Chain 1: Iteration: 1250 / 2500 [ 50%] (Warmup)

Chain 1: Iteration: 1251 / 2500 [ 50%] (Sampling)

Chain 1: Iteration: 1500 / 2500 [ 60%] (Sampling)

Chain 1: Iteration: 1750 / 2500 [ 70%] (Sampling)

Chain 1: Iteration: 2000 / 2500 [ 80%] (Sampling)

Chain 1: Iteration: 2250 / 2500 [ 90%] (Sampling)

Chain 1: Iteration: 2500 / 2500 [100%] (Sampling)

Chain 1:

Chain 1: Elapsed Time: 39.975 seconds (Warm-up)

Chain 1: 30.979 seconds (Sampling)

Chain 1: 70.954 seconds (Total)

Chain 1:

SAMPLING FOR MODEL 'bernoulli' NOW (CHAIN 2).

Chain 2:

Chain 2: Gradient evaluation took 0.000694 seconds

Chain 2: 1000 transitions using 10 leapfrog steps per transition would take 6.94 seconds.

Chain 2: Adjust your expectations accordingly!

Chain 2:

Chain 2:

Chain 2: Iteration: 1 / 2500 [ 0%] (Warmup)

Chain 2: Iteration: 250 / 2500 [ 10%] (Warmup)

Chain 2: Iteration: 500 / 2500 [ 20%] (Warmup)

Chain 2: Iteration: 750 / 2500 [ 30%] (Warmup)

Chain 2: Iteration: 1000 / 2500 [ 40%] (Warmup)

Chain 2: Iteration: 1250 / 2500 [ 50%] (Warmup)

Chain 2: Iteration: 1251 / 2500 [ 50%] (Sampling)

Chain 2: Iteration: 1500 / 2500 [ 60%] (Sampling)

Chain 2: Iteration: 1750 / 2500 [ 70%] (Sampling)

Chain 2: Iteration: 2000 / 2500 [ 80%] (Sampling)

Chain 2: Iteration: 2250 / 2500 [ 90%] (Sampling)

Chain 2: Iteration: 2500 / 2500 [100%] (Sampling)

Chain 2:

Chain 2: Elapsed Time: 38.765 seconds (Warm-up)

Chain 2: 26.572 seconds (Sampling)

Chain 2: 65.337 seconds (Total)

Chain 2:

SAMPLING FOR MODEL 'bernoulli' NOW (CHAIN 3).

Chain 3:

Chain 3: Gradient evaluation took 0.000668 seconds

Chain 3: 1000 transitions using 10 leapfrog steps per transition would take 6.68 seconds.

Chain 3: Adjust your expectations accordingly!

Chain 3:

Chain 3:

Chain 3: Iteration: 1 / 2500 [ 0%] (Warmup)

Chain 3: Iteration: 250 / 2500 [ 10%] (Warmup)

Chain 3: Iteration: 500 / 2500 [ 20%] (Warmup)

Chain 3: Iteration: 750 / 2500 [ 30%] (Warmup)

Chain 3: Iteration: 1000 / 2500 [ 40%] (Warmup)

Chain 3: Iteration: 1250 / 2500 [ 50%] (Warmup)

Chain 3: Iteration: 1251 / 2500 [ 50%] (Sampling)

Chain 3: Iteration: 1500 / 2500 [ 60%] (Sampling)

Chain 3: Iteration: 1750 / 2500 [ 70%] (Sampling)

Chain 3: Iteration: 2000 / 2500 [ 80%] (Sampling)

Chain 3: Iteration: 2250 / 2500 [ 90%] (Sampling)

Chain 3: Iteration: 2500 / 2500 [100%] (Sampling)

Chain 3:

Chain 3: Elapsed Time: 35.565 seconds (Warm-up)

Chain 3: 24.82 seconds (Sampling)

Chain 3: 60.385 seconds (Total)

Chain 3:

SAMPLING FOR MODEL 'bernoulli' NOW (CHAIN 4).

Chain 4:

Chain 4: Gradient evaluation took 0.000662 seconds

Chain 4: 1000 transitions using 10 leapfrog steps per transition would take 6.62 seconds.

Chain 4: Adjust your expectations accordingly!

Chain 4:

Chain 4:

Chain 4: Iteration: 1 / 2500 [ 0%] (Warmup)

Chain 4: Iteration: 250 / 2500 [ 10%] (Warmup)

Chain 4: Iteration: 500 / 2500 [ 20%] (Warmup)

Chain 4: Iteration: 750 / 2500 [ 30%] (Warmup)

Chain 4: Iteration: 1000 / 2500 [ 40%] (Warmup)

Chain 4: Iteration: 1250 / 2500 [ 50%] (Warmup)

Chain 4: Iteration: 1251 / 2500 [ 50%] (Sampling)

Chain 4: Iteration: 1500 / 2500 [ 60%] (Sampling)

Chain 4: Iteration: 1750 / 2500 [ 70%] (Sampling)

Chain 4: Iteration: 2000 / 2500 [ 80%] (Sampling)

Chain 4: Iteration: 2250 / 2500 [ 90%] (Sampling)

Chain 4: Iteration: 2500 / 2500 [100%] (Sampling)

Chain 4:

Chain 4: Elapsed Time: 40.447 seconds (Warm-up)

Chain 4: 25.591 seconds (Sampling)

Chain 4: 66.038 seconds (Total)

Chain 4:

Let’s check our model. Some of the outputs are quite long because our population of random effects is quite large.
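Because there is one Rhat value per parameter, including one per expedition, it can be easier to summarise the values than to read them all. The sketch below is base R only and uses a handful of values copied from the output in this practical as a stand-in for the full named vector; the threshold of 1.01 is an assumed cut-off (other sources use 1.05 or 1.1).

```r
# A few Rhat values as a stand-in for the full named vector returned by rhat().
rhats <- c(
  "(Intercept)" = 1.0005532,
  "age" = 0.9995728,
  "oxygen_usedTRUE" = 1.0003627,
  "b[(Intercept) expedition_id:AMAD03107]" = 0.9997726
)

threshold <- 1.01              # assumed cut-off for flagging poor convergence

# Overall range, then any parameters exceeding the threshold
print(range(rhats))
flagged <- rhats[rhats > threshold]
print(length(flagged))         # number of parameters that need a closer look
```

With the fitted model, the same summary could be applied to the vector returned by rhat(climb_stan_glm1) instead of the stand-in values.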

library(bayesplot)
rhat(climb_stan_glm1) # ?rhat for further details

(Intercept)

1.0005532

age

0.9995728

oxygen_usedTRUE

1.0003627

b[(Intercept) expedition_id:AMAD03107]

0.9997726

b[(Intercept) expedition_id:AMAD03327]

0.9998950

b[(Intercept) expedition_id:AMAD05338]

1.0000518

b[(Intercept) expedition_id:AMAD06110]

0.9997008

b[(Intercept) expedition_id:AMAD06334]

0.9996629

b[(Intercept) expedition_id:AMAD07101]

0.9998991

b[(Intercept) expedition_id:AMAD07310]

1.0002853

b[(Intercept) expedition_id:AMAD07337]

1.0003666

b[(Intercept) expedition_id:AMAD07340]

0.9999155

b[(Intercept) expedition_id:AMAD09331]

0.9996659

b[(Intercept) expedition_id:AMAD09333]

0.9994012

b[(Intercept) expedition_id:AMAD11304]

0.9997037

b[(Intercept) expedition_id:AMAD11321]

0.9998430

b[(Intercept) expedition_id:AMAD11340]

1.0003190

b[(Intercept) expedition_id:AMAD12108]

0.9995780

b[(Intercept) expedition_id:AMAD12342]

1.0004821

b[(Intercept) expedition_id:AMAD14105]

0.9998667

b[(Intercept) expedition_id:AMAD14322]

0.9996353

b[(Intercept) expedition_id:AMAD16354]

0.9994518

b[(Intercept) expedition_id:AMAD17307]

1.0001298

b[(Intercept) expedition_id:AMAD81101]

1.0003451

b[(Intercept) expedition_id:AMAD84401]

0.9997012

b[(Intercept) expedition_id:AMAD89102]

0.9995925

b[(Intercept) expedition_id:AMAD93304]

0.9999750

b[(Intercept) expedition_id:AMAD98311]

0.9999970

b[(Intercept) expedition_id:AMAD98316]

1.0007296

b[(Intercept) expedition_id:AMAD98402]

0.9994348

b[(Intercept) expedition_id:ANN115106]

0.9998717

b[(Intercept) expedition_id:ANN115108]

1.0000472

b[(Intercept) expedition_id:ANN179301]

0.9994432

b[(Intercept) expedition_id:ANN185401]

0.9995715

b[(Intercept) expedition_id:ANN190101]

0.9996483

b[(Intercept) expedition_id:ANN193302]

1.0002134

b[(Intercept) expedition_id:ANN281302]

0.9995369

b[(Intercept) expedition_id:ANN283302]

0.9995802

b[(Intercept) expedition_id:ANN378301]

1.0005540

b[(Intercept) expedition_id:ANN402302]

0.9994685

b[(Intercept) expedition_id:ANN405301]

0.9998415

b[(Intercept) expedition_id:ANN409201]

1.0005906

b[(Intercept) expedition_id:ANN416101]

0.9995834

b[(Intercept) expedition_id:BARU01304]

1.0007201

b[(Intercept) expedition_id:BARU02101]

1.0001295

b[(Intercept) expedition_id:BARU02303]

0.9997006

b[(Intercept) expedition_id:BARU09102]

0.9999938

b[(Intercept) expedition_id:BARU10312]

0.9999667

b[(Intercept) expedition_id:BARU11303]

1.0006530

b[(Intercept) expedition_id:BARU92301]

1.0004625

b[(Intercept) expedition_id:BARU94301]

1.0007040

b[(Intercept) expedition_id:BARU94307]

0.9997854

b[(Intercept) expedition_id:BHRI86101]

0.9996264

b[(Intercept) expedition_id:CHAM18101]

0.9995013

b[(Intercept) expedition_id:CHAM91301]

1.0000014

b[(Intercept) expedition_id:CHOY02117]

1.0001804

b[(Intercept) expedition_id:CHOY03103]

1.0011492

b[(Intercept) expedition_id:CHOY03318]

1.0008072

b[(Intercept) expedition_id:CHOY04122]

0.9997353

b[(Intercept) expedition_id:CHOY04302]

1.0003967

b[(Intercept) expedition_id:CHOY06330]

1.0001162

b[(Intercept) expedition_id:CHOY07310]

1.0000940

b[(Intercept) expedition_id:CHOY07330]

0.9997409

b[(Intercept) expedition_id:CHOY07356]

0.9997544

b[(Intercept) expedition_id:CHOY07358]

0.9997619

b[(Intercept) expedition_id:CHOY10328]

0.9997056

b[(Intercept) expedition_id:CHOY10341]

0.9998975

b[(Intercept) expedition_id:CHOY93304]

0.9999339

b[(Intercept) expedition_id:CHOY96319]

1.0001085

b[(Intercept) expedition_id:CHOY97103]

1.0007538

b[(Intercept) expedition_id:CHOY98307]

0.9996961

b[(Intercept) expedition_id:CHOY99301]

1.0000512

b[(Intercept) expedition_id:DHA109113]

0.9996487

b[(Intercept) expedition_id:DHA116106]

0.9999480

b[(Intercept) expedition_id:DHA183302]

0.9999637

b[(Intercept) expedition_id:DHA193302]

0.9995715

b[(Intercept) expedition_id:DHA196104]

1.0000891

b[(Intercept) expedition_id:DHA197107]

0.9995235

b[(Intercept) expedition_id:DHA197307]

1.0005591

b[(Intercept) expedition_id:DHA198107]

0.9997476

b[(Intercept) expedition_id:DHA198302]

0.9997488

b[(Intercept) expedition_id:DHAM88301]

0.9999669

b[(Intercept) expedition_id:DZA215301]

1.0000358

b[(Intercept) expedition_id:EVER00116]

0.9996641

b[(Intercept) expedition_id:EVER00148]

0.9992599

b[(Intercept) expedition_id:EVER01108]

1.0005965

b[(Intercept) expedition_id:EVER02112]

0.9996763

b[(Intercept) expedition_id:EVER03124]

1.0001600

b[(Intercept) expedition_id:EVER03168]

1.0012481

b[(Intercept) expedition_id:EVER04150]

1.0003185

b[(Intercept) expedition_id:EVER04156]

0.9996000

b[(Intercept) expedition_id:EVER06133]

0.9998157

b[(Intercept) expedition_id:EVER06136]

1.0007162

b[(Intercept) expedition_id:EVER06152]

1.0001397

b[(Intercept) expedition_id:EVER06163]

1.0004249

b[(Intercept) expedition_id:EVER07107]

0.9998612

b[(Intercept) expedition_id:EVER07148]

0.9995514

b[(Intercept) expedition_id:EVER07175]

1.0012568

b[(Intercept) expedition_id:EVER07192]

1.0000522

b[(Intercept) expedition_id:EVER07194]

1.0001655

b[(Intercept) expedition_id:EVER08139]

1.0012186

b[(Intercept) expedition_id:EVER08152]

1.0009996

b[(Intercept) expedition_id:EVER08154]

0.9996120

b[(Intercept) expedition_id:EVER10121]

0.9995880

b[(Intercept) expedition_id:EVER10136]

0.9997021

b[(Intercept) expedition_id:EVER10154]

1.0018716

b[(Intercept) expedition_id:EVER11103]

1.0010148

b[(Intercept) expedition_id:EVER11134]

0.9997410

b[(Intercept) expedition_id:EVER11144]

0.9999872

b[(Intercept) expedition_id:EVER11152]

1.0000995

b[(Intercept) expedition_id:EVER12133]

0.9997449

b[(Intercept) expedition_id:EVER12141]

0.9996518

b[(Intercept) expedition_id:EVER14138]

0.9994947

b[(Intercept) expedition_id:EVER14165]

1.0000990

b[(Intercept) expedition_id:EVER15117]

0.9997793

b[(Intercept) expedition_id:EVER15123]

0.9996767

b[(Intercept) expedition_id:EVER16105]

1.0016690

b[(Intercept) expedition_id:EVER17123]

1.0016099

b[(Intercept) expedition_id:EVER17137]

0.9997361

b[(Intercept) expedition_id:EVER18118]

0.9995584

b[(Intercept) expedition_id:EVER18132]

0.9996551

b[(Intercept) expedition_id:EVER19104]

0.9993636

b[(Intercept) expedition_id:EVER19112]

1.0004859

b[(Intercept) expedition_id:EVER19120]

1.0001846

b[(Intercept) expedition_id:EVER81101]

0.9994650

b[(Intercept) expedition_id:EVER86101]

1.0004059

b[(Intercept) expedition_id:EVER89304]

0.9995315

b[(Intercept) expedition_id:EVER91108]

0.9998226

b[(Intercept) expedition_id:EVER96302]

1.0000293

b[(Intercept) expedition_id:EVER97105]

1.0006378

b[(Intercept) expedition_id:EVER98116]

1.0002699

b[(Intercept) expedition_id:EVER99107]

0.9993799

b[(Intercept) expedition_id:EVER99113]

1.0002634

b[(Intercept) expedition_id:EVER99116]

0.9995102

b[(Intercept) expedition_id:FANG97301]

0.9998397

b[(Intercept) expedition_id:GAN379301]

1.0004571

b[(Intercept) expedition_id:GAUR83101]

0.9998868

b[(Intercept) expedition_id:GURK03301]

1.0010562

b[(Intercept) expedition_id:GYAJ15101]

0.9999490

b[(Intercept) expedition_id:HIML03302]

0.9998998

b[(Intercept) expedition_id:HIML08301]

0.9998365

b[(Intercept) expedition_id:HIML08307]

0.9994337

b[(Intercept) expedition_id:HIML13303]

1.0003050

b[(Intercept) expedition_id:HIML13305]

0.9997714

b[(Intercept) expedition_id:HIML18310]

0.9995565

b[(Intercept) expedition_id:JANU02401]

0.9997431

b[(Intercept) expedition_id:JANU89401]

0.9996309

b[(Intercept) expedition_id:JANU97301]

0.9994237

b[(Intercept) expedition_id:JANU98102]

0.9999609

b[(Intercept) expedition_id:JETH78101]

0.9995684

b[(Intercept) expedition_id:KABD85101]

0.9997887

b[(Intercept) expedition_id:KAN180101]

0.9994865

b[(Intercept) expedition_id:KANG81101]

1.0000984

b[(Intercept) expedition_id:KANG94301]

1.0004965

b[(Intercept) expedition_id:KWAN82402]

0.9996882

b[(Intercept) expedition_id:LANG84101]

0.9996592

b[(Intercept) expedition_id:LEOE05301]

0.9994956

b[(Intercept) expedition_id:LHOT09108]

0.9999177

b[(Intercept) expedition_id:LHOT88301]

0.9996417

b[(Intercept) expedition_id:LNJU16301]

0.9997315

b[(Intercept) expedition_id:LSHR90301]

1.0004738

b[(Intercept) expedition_id:MAKA01104]

0.9997320

b[(Intercept) expedition_id:MAKA02102]

1.0005283

b[(Intercept) expedition_id:MAKA02103]

0.9996149

b[(Intercept) expedition_id:MAKA08112]

1.0013837

b[(Intercept) expedition_id:MAKA09108]

0.9998132

b[(Intercept) expedition_id:MAKA15106]

1.0003040

b[(Intercept) expedition_id:MAKA90306]

1.0005162

b[(Intercept) expedition_id:MANA06305]

1.0002866

b[(Intercept) expedition_id:MANA08324]

1.0004055

b[(Intercept) expedition_id:MANA09107]

1.0005475

b[(Intercept) expedition_id:MANA10319]

0.9997260

b[(Intercept) expedition_id:MANA11106]

0.9996387

b[(Intercept) expedition_id:MANA11318]

0.9994870

b[(Intercept) expedition_id:MANA17333]

0.9998609

b[(Intercept) expedition_id:MANA18306]

1.0006496

b[(Intercept) expedition_id:MANA80101]

0.9999937

b[(Intercept) expedition_id:MANA82401]

0.9997594

b[(Intercept) expedition_id:MANA84101]

1.0007098

b[(Intercept) expedition_id:MANA91301]

1.0002649

b[(Intercept) expedition_id:MANA97301]

1.0002763

b[(Intercept) expedition_id:NAMP79101]

0.9999770

b[(Intercept) expedition_id:NEMJ83101]

0.9995502

b[(Intercept) expedition_id:NILC79101]

0.9998732

b[(Intercept) expedition_id:NUPT99102]

0.9994055

b[(Intercept) expedition_id:OHMI89101]

1.0004379

b[(Intercept) expedition_id:PUMO06301]

0.9998749

b[(Intercept) expedition_id:PUMO80302]

1.0008513

b[(Intercept) expedition_id:PUMO92308]

0.9994772

b[(Intercept) expedition_id:PUMO96101]

1.0000502

b[(Intercept) expedition_id:PUTH02301]

0.9998146

b[(Intercept) expedition_id:PUTH97302]

0.9997939

b[(Intercept) expedition_id:SHAL05301]

0.9995011

b[(Intercept) expedition_id:SPHN93101]

0.9996239

b[(Intercept) expedition_id:SYKG12301]

0.9997353

b[(Intercept) expedition_id:TAWO83101]

0.9995015

b[(Intercept) expedition_id:TILI04301]

1.0003972

b[(Intercept) expedition_id:TILI05301]

1.0010002

b[(Intercept) expedition_id:TILI91302]

0.9999817

b[(Intercept) expedition_id:TILI92301]

0.9996376

b[(Intercept) expedition_id:TKPO02102]

1.0009627

b[(Intercept) expedition_id:TUKU05101]

0.9996587

b[(Intercept) expedition_id:TUKU16301]

0.9999838

Sigma[expedition_id:(Intercept),(Intercept)]

1.0012913
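With around 200 random intercepts, scanning every printed R-hat value by eye is tedious. A minimal sketch of summarising them instead is given below. So that it runs on its own, it uses a handful of values copied from the output above as a stand-in for the full vector; with the fitted model in memory, `rhats <- rhat(climb_stan_glm1)` would replace the hand-typed values. The 1.1 cut-off is an assumption here: it is a commonly quoted rule of thumb, and stricter thresholds are also in use.

```r
# A few R-hat values copied from the output above (stand-in for the full vector).
# With the fitted model in memory you would use: rhats <- rhat(climb_stan_glm1)
rhats <- c(
  "(Intercept)" = 1.0005532,
  "age" = 0.9995728,
  "oxygen_usedTRUE" = 1.0003627,
  "b[(Intercept) expedition_id:EVER10154]" = 1.0018716,
  "Sigma[expedition_id:(Intercept),(Intercept)]" = 1.0012913
)

# Assumed threshold: 1.1 is a common rule of thumb, not fixed by this practical.
threshold <- 1.1

# Smallest and largest R-hat in the vector.
range(rhats)

# Parameters whose R-hat exceeds the threshold, and how many there are.
flagged <- rhats[rhats > threshold]
length(flagged)

# Name of the parameter with the largest R-hat.
names(which.max(rhats))
```

If a plot is preferred over numbers, `mcmc_rhat(rhats)` from bayesplot draws the same values.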

stan_glmer

family: binomial [logit]

formula: success ~ age + oxygen_used + (1 | expedition_id)

observations: 2076

------

Median MAD_SD

(Intercept) -1.4 0.5

age 0.0 0.0

oxygen_usedTRUE 5.8 0.5

Error terms:

Groups Name Std.Dev.

expedition_id (Intercept) 3.7

Num. levels: expedition_id 200

------

* For help interpreting the printed output see ?print.stanreg

* For info on the priors used see ?prior_summary.stanreg
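The coefficients printed above are on the log-odds (logit) scale, which is hard to read directly. As a rough illustration, the sketch below converts the rounded posterior medians into an odds ratio and into success probabilities using the inverse logit (`plogis`). Two things are assumptions of this sketch rather than part of the practical: the random intercept is set to zero (a "typical" expedition), and the age coefficient, which prints as 0.0 at one decimal place, is taken as -0.048 from the `summary()` output with `digits = 5`. The helper `p_success` is a hypothetical name introduced only for this example.

```r
# Rounded posterior medians from the printed output (log-odds scale).
b_intercept <- -1.4
b_oxygen    <- 5.8
# Age prints as 0.0 at one decimal place; -0.048 is taken from summary().
b_age       <- -0.048

# Odds ratio for using oxygen, holding age and expedition fixed.
or_oxygen <- exp(b_oxygen)

# Probability of success for a climber of a given age in a "typical"
# expedition (random intercept set to 0, an assumption of this sketch).
p_success <- function(age, oxygen_used) {
  plogis(b_intercept + b_age * age + b_oxygen * as.numeric(oxygen_used))
}

or_oxygen
p_success(age = 40, oxygen_used = FALSE)
p_success(age = 40, oxygen_used = TRUE)
```

Because the expedition-level standard deviation is large (3.7 on the logit scale), probabilities for any particular expedition can differ considerably from these "typical expedition" values.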

summary (climb_stan_glm1, digits = 5 )

Model Info:

function: stan_glmer

family: binomial [logit]

formula: success ~ age + oxygen_used + (1 | expedition_id)

algorithm: sampling

sample: 5000 (posterior sample size)

priors: see help('prior_summary')

observations: 2076

groups: expedition_id (200)

Estimates:

mean sd 10%

(Intercept) -1.42560 0.48108 -2.03863

age -0.04774 0.00926 -0.05941

oxygen_usedTRUE 5.81435 0.48572 5.19214

b[(Intercept) expedition_id:AMAD03107] 2.58462 1.05527 1.22886

b[(Intercept) expedition_id:AMAD03327] 6.09344 1.86907 4.00374

b[(Intercept) expedition_id:AMAD05338] 4.12540 0.74295 3.18067

b[(Intercept) expedition_id:AMAD06110] -2.12498 2.63546 -5.76218

b[(Intercept) expedition_id:AMAD06334] -2.66437 2.43445 -6.03105

b[(Intercept) expedition_id:AMAD07101] -2.59019 2.47853 -6.07242

b[(Intercept) expedition_id:AMAD07310] 4.99448 0.73104 4.07581

b[(Intercept) expedition_id:AMAD07337] 2.91814 0.90162 1.77134

b[(Intercept) expedition_id:AMAD07340] 3.00031 0.83291 1.94906

b[(Intercept) expedition_id:AMAD09331] 3.73837 1.04516 2.43805

b[(Intercept) expedition_id:AMAD09333] 3.38418 0.97764 2.17328

b[(Intercept) expedition_id:AMAD11304] 3.94162 1.04654 2.62305

b[(Intercept) expedition_id:AMAD11321] 6.68067 1.76248 4.63832

b[(Intercept) expedition_id:AMAD11340] 6.27161 1.83718 4.20223

b[(Intercept) expedition_id:AMAD12108] 4.53617 1.20439 3.12452

b[(Intercept) expedition_id:AMAD12342] 4.82718 1.09813 3.54095

b[(Intercept) expedition_id:AMAD14105] -2.14392 2.57195 -5.62741

b[(Intercept) expedition_id:AMAD14322] 2.60047 0.75703 1.61986

b[(Intercept) expedition_id:AMAD16354] 4.15790 1.00258 2.88666

b[(Intercept) expedition_id:AMAD17307] 2.35569 0.99396 1.07838

b[(Intercept) expedition_id:AMAD81101] 4.56264 0.84324 3.50710

b[(Intercept) expedition_id:AMAD84401] -2.47170 2.55421 -5.90571

b[(Intercept) expedition_id:AMAD89102] -2.23811 2.48509 -5.59346

b[(Intercept) expedition_id:AMAD93304] 3.01674 0.94991 1.82339

b[(Intercept) expedition_id:AMAD98311] 6.60274 1.78207 4.58420

b[(Intercept) expedition_id:AMAD98316] 3.41252 0.68715 2.54421

b[(Intercept) expedition_id:AMAD98402] 4.29294 1.18256 2.88351

b[(Intercept) expedition_id:ANN115106] -2.62241 2.43068 -5.91960

b[(Intercept) expedition_id:ANN115108] -2.51791 2.43742 -5.85671

b[(Intercept) expedition_id:ANN179301] -2.50727 2.47726 -5.90908

b[(Intercept) expedition_id:ANN185401] -2.95374 2.36391 -6.18820

b[(Intercept) expedition_id:ANN190101] -2.51231 2.46417 -5.94034

b[(Intercept) expedition_id:ANN193302] -2.72987 2.45709 -6.12101

b[(Intercept) expedition_id:ANN281302] -2.92771 2.31649 -6.05400

b[(Intercept) expedition_id:ANN283302] -2.41458 2.43588 -5.68305

b[(Intercept) expedition_id:ANN378301] -2.96780 2.25677 -6.05808

b[(Intercept) expedition_id:ANN402302] -2.26993 2.58118 -5.72633

b[(Intercept) expedition_id:ANN405301] -1.85081 2.67077 -5.48998

b[(Intercept) expedition_id:ANN409201] 6.52670 1.82761 4.44457

b[(Intercept) expedition_id:ANN416101] -2.22586 2.64460 -5.80876

b[(Intercept) expedition_id:BARU01304] -2.38045 2.56391 -5.92191

b[(Intercept) expedition_id:BARU02101] -2.50414 2.37743 -5.72888

b[(Intercept) expedition_id:BARU02303] 4.05808 0.98773 2.81271

b[(Intercept) expedition_id:BARU09102] -2.34920 2.46361 -5.67170

b[(Intercept) expedition_id:BARU10312] -2.59506 2.39015 -5.82289

b[(Intercept) expedition_id:BARU11303] 4.24257 0.69951 3.35606

b[(Intercept) expedition_id:BARU92301] 3.35737 0.77896 2.38168

b[(Intercept) expedition_id:BARU94301] -2.23281 2.47358 -5.52525

b[(Intercept) expedition_id:BARU94307] -2.64432 2.44704 -5.98007

b[(Intercept) expedition_id:BHRI86101] -2.84606 2.35327 -6.09613

b[(Intercept) expedition_id:CHAM18101] -2.13296 2.57182 -5.53569

b[(Intercept) expedition_id:CHAM91301] 2.16264 0.74801 1.19401

b[(Intercept) expedition_id:CHOY02117] 2.25046 1.01699 0.91867

b[(Intercept) expedition_id:CHOY03103] 0.86263 1.27007 -0.74262

b[(Intercept) expedition_id:CHOY03318] -2.71607 0.70731 -3.62055

b[(Intercept) expedition_id:CHOY04122] 1.73790 0.91294 0.52959

b[(Intercept) expedition_id:CHOY04302] 4.53732 1.11176 3.22077

b[(Intercept) expedition_id:CHOY06330] 3.56472 0.99278 2.32850

b[(Intercept) expedition_id:CHOY07310] 3.59713 0.91066 2.44213

b[(Intercept) expedition_id:CHOY07330] -2.54613 2.47200 -5.73410

b[(Intercept) expedition_id:CHOY07356] -2.46844 2.46461 -5.82493

b[(Intercept) expedition_id:CHOY07358] 2.36368 0.81869 1.30795

b[(Intercept) expedition_id:CHOY10328] -3.94541 2.17354 -6.74664

b[(Intercept) expedition_id:CHOY10341] -2.06983 2.60626 -5.55325

b[(Intercept) expedition_id:CHOY93304] -2.53428 2.47876 -5.93481

b[(Intercept) expedition_id:CHOY96319] 2.15476 0.84894 1.06193

b[(Intercept) expedition_id:CHOY97103] -1.60506 0.94099 -2.78598

b[(Intercept) expedition_id:CHOY98307] -0.67156 1.62200 -2.81071

b[(Intercept) expedition_id:CHOY99301] 3.21945 0.72672 2.29728

b[(Intercept) expedition_id:DHA109113] 2.47803 1.39299 0.70144

b[(Intercept) expedition_id:DHA116106] -2.75514 2.42024 -6.06898

b[(Intercept) expedition_id:DHA183302] -2.68137 2.46419 -6.04356

b[(Intercept) expedition_id:DHA193302] 1.41083 1.33568 -0.31465

b[(Intercept) expedition_id:DHA196104] -2.50787 2.47336 -5.89618

b[(Intercept) expedition_id:DHA197107] -2.26242 2.51313 -5.70526

b[(Intercept) expedition_id:DHA197307] -2.16984 2.53111 -5.56595

b[(Intercept) expedition_id:DHA198107] 1.52062 0.95932 0.29941

b[(Intercept) expedition_id:DHA198302] -2.16545 2.48544 -5.51961

b[(Intercept) expedition_id:DHAM88301] -2.27515 2.51886 -5.72877

b[(Intercept) expedition_id:DZA215301] 5.90687 1.91343 3.73474

b[(Intercept) expedition_id:EVER00116] -1.24262 1.14380 -2.66075

b[(Intercept) expedition_id:EVER00148] -2.24264 2.63015 -5.78662

b[(Intercept) expedition_id:EVER01108] -1.23053 0.95860 -2.44491

b[(Intercept) expedition_id:EVER02112] 0.13470 1.39529 -1.67925

b[(Intercept) expedition_id:EVER03124] 0.39413 1.25981 -1.22383

b[(Intercept) expedition_id:EVER03168] -1.79239 1.02179 -3.04329

b[(Intercept) expedition_id:EVER04150] 1.00525 1.38190 -0.76730

b[(Intercept) expedition_id:EVER04156] -0.01150 1.20505 -1.57930

b[(Intercept) expedition_id:EVER06133] -1.39418 0.97974 -2.57853

b[(Intercept) expedition_id:EVER06136] -0.52842 0.96092 -1.71345

b[(Intercept) expedition_id:EVER06152] -2.18320 1.45586 -4.02147

b[(Intercept) expedition_id:EVER06163] -0.44209 0.99629 -1.70323

b[(Intercept) expedition_id:EVER07107] 0.98772 1.39267 -0.76457

b[(Intercept) expedition_id:EVER07148] -1.12615 1.07818 -2.47970

b[(Intercept) expedition_id:EVER07175] -0.19697 1.02673 -1.50260

b[(Intercept) expedition_id:EVER07192] 2.55135 2.45645 -0.30989

b[(Intercept) expedition_id:EVER07194] -6.06487 1.84802 -8.58170

b[(Intercept) expedition_id:EVER08139] -0.51572 0.65713 -1.35592

b[(Intercept) expedition_id:EVER08152] 0.10731 0.97523 -1.10485

b[(Intercept) expedition_id:EVER08154] 1.08240 1.26193 -0.53868

b[(Intercept) expedition_id:EVER10121] -0.79711 1.22634 -2.31084

b[(Intercept) expedition_id:EVER10136] -1.12381 1.20661 -2.64941

b[(Intercept) expedition_id:EVER10154] -1.72121 0.70279 -2.58507

b[(Intercept) expedition_id:EVER11103] -0.43040 0.75076 -1.37400

b[(Intercept) expedition_id:EVER11134] 3.35726 2.23395 0.78463

b[(Intercept) expedition_id:EVER11144] -5.66962 1.90437 -8.17256

b[(Intercept) expedition_id:EVER11152] 3.46002 2.25145 0.84028

b[(Intercept) expedition_id:EVER12133] 2.59615 2.44363 -0.25746

b[(Intercept) expedition_id:EVER12141] 1.56961 1.55855 -0.34308

b[(Intercept) expedition_id:EVER14138] -2.07616 2.56915 -5.58432

b[(Intercept) expedition_id:EVER14165] 1.67658 1.51871 -0.25401

b[(Intercept) expedition_id:EVER15117] -3.44779 2.23474 -6.39809

b[(Intercept) expedition_id:EVER15123] -3.26395 2.28611 -6.38874

b[(Intercept) expedition_id:EVER16105] -1.29497 0.72135 -2.19193

b[(Intercept) expedition_id:EVER17123] -1.62202 0.75444 -2.55988

b[(Intercept) expedition_id:EVER17137] 1.88869 1.41177 0.13340

b[(Intercept) expedition_id:EVER18118] 1.13297 1.03975 -0.18107

b[(Intercept) expedition_id:EVER18132] 0.55218 1.50267 -1.42165

b[(Intercept) expedition_id:EVER19104] 3.50067 2.21724 1.00455

b[(Intercept) expedition_id:EVER19112] -7.10545 1.69123 -9.34800

b[(Intercept) expedition_id:EVER19120] 3.40326 2.19662 0.87558

b[(Intercept) expedition_id:EVER81101] -4.49580 2.24708 -7.48117

b[(Intercept) expedition_id:EVER86101] -2.52059 2.46650 -5.83292

b[(Intercept) expedition_id:EVER89304] -2.94335 2.30166 -6.05163

b[(Intercept) expedition_id:EVER91108] -5.19499 2.04781 -7.89789

b[(Intercept) expedition_id:EVER96302] -0.34112 1.12605 -1.83561

b[(Intercept) expedition_id:EVER97105] -4.89106 2.00601 -7.55148

b[(Intercept) expedition_id:EVER98116] -0.82013 1.30463 -2.38770

b[(Intercept) expedition_id:EVER99107] -4.83542 2.01746 -7.47788

b[(Intercept) expedition_id:EVER99113] -2.11439 1.08111 -3.46708

b[(Intercept) expedition_id:EVER99116] 0.62323 1.06335 -0.71814

b[(Intercept) expedition_id:FANG97301] -2.55724 2.49524 -5.85915

b[(Intercept) expedition_id:GAN379301] 4.82999 1.07065 3.55650

b[(Intercept) expedition_id:GAUR83101] -2.73787 2.44531 -6.03820

b[(Intercept) expedition_id:GURK03301] -2.38419 2.48927 -5.83719

b[(Intercept) expedition_id:GYAJ15101] -1.89392 2.63842 -5.45911

b[(Intercept) expedition_id:HIML03302] 1.87013 0.98935 0.63241

b[(Intercept) expedition_id:HIML08301] 1.62213 0.92275 0.42413

b[(Intercept) expedition_id:HIML08307] -2.05948 2.52706 -5.46311

b[(Intercept) expedition_id:HIML13303] 5.01627 0.89396 3.90170

b[(Intercept) expedition_id:HIML13305] -2.12441 2.52219 -5.53771

b[(Intercept) expedition_id:HIML18310] 6.13444 1.81690 4.03634

b[(Intercept) expedition_id:JANU02401] -2.33309 2.52900 -5.81345

b[(Intercept) expedition_id:JANU89401] -2.50137 2.39675 -5.75711

b[(Intercept) expedition_id:JANU97301] -2.63817 2.34921 -5.80643

b[(Intercept) expedition_id:JANU98102] -2.29479 2.60749 -5.79529

b[(Intercept) expedition_id:JETH78101] 3.04722 1.03428 1.71516

b[(Intercept) expedition_id:KABD85101] 3.23227 0.89003 2.12167

b[(Intercept) expedition_id:KAN180101] -2.25321 2.45660 -5.57622

b[(Intercept) expedition_id:KANG81101] -0.65444 0.95217 -1.89131

b[(Intercept) expedition_id:KANG94301] -2.81457 2.29015 -5.94033

b[(Intercept) expedition_id:KWAN82402] 6.01302 1.83099 3.90995

b[(Intercept) expedition_id:LANG84101] -2.57763 2.45793 -5.88549

b[(Intercept) expedition_id:LEOE05301] -2.48805 2.41866 -5.76391

b[(Intercept) expedition_id:LHOT09108] 0.49017 1.41259 -1.35811

b[(Intercept) expedition_id:LHOT88301] 0.96358 1.81097 -1.33758

b[(Intercept) expedition_id:LNJU16301] -2.21115 2.54756 -5.68762

b[(Intercept) expedition_id:LSHR90301] 1.44354 0.94455 0.22243

b[(Intercept) expedition_id:MAKA01104] -2.39303 2.55136 -5.82507

b[(Intercept) expedition_id:MAKA02102] 1.77533 0.91557 0.59526

b[(Intercept) expedition_id:MAKA02103] 4.01277 0.93763 2.87114

b[(Intercept) expedition_id:MAKA08112] -5.78147 1.98138 -8.37621

b[(Intercept) expedition_id:MAKA09108] 0.04369 1.27699 -1.63840

b[(Intercept) expedition_id:MAKA15106] -2.45891 2.36193 -5.74649

b[(Intercept) expedition_id:MAKA90306] -2.69351 2.43369 -5.97698

b[(Intercept) expedition_id:MANA06305] -2.19010 2.66128 -5.78084

b[(Intercept) expedition_id:MANA08324] -6.12757 1.83096 -8.60786

b[(Intercept) expedition_id:MANA09107] 1.09540 1.37288 -0.66396

b[(Intercept) expedition_id:MANA10319] 2.05427 0.75905 1.09071

b[(Intercept) expedition_id:MANA11106] 4.13254 1.17547 2.71277

b[(Intercept) expedition_id:MANA11318] 1.70900 1.50181 -0.17858

b[(Intercept) expedition_id:MANA17333] 4.68512 2.17516 2.11668

b[(Intercept) expedition_id:MANA18306] 0.56117 1.02102 -0.70021

b[(Intercept) expedition_id:MANA80101] -2.80372 1.48779 -4.69586

b[(Intercept) expedition_id:MANA82401] -5.70714 1.95846 -8.28752

b[(Intercept) expedition_id:MANA84101] 6.86763 1.73485 4.86400

b[(Intercept) expedition_id:MANA91301] -2.57618 2.49091 -5.98577

b[(Intercept) expedition_id:MANA97301] 2.36095 0.71477 1.45079

b[(Intercept) expedition_id:NAMP79101] -2.57539 2.38751 -5.78000

b[(Intercept) expedition_id:NEMJ83101] -3.07247 2.34057 -6.23095

b[(Intercept) expedition_id:NILC79101] 3.71212 0.92944 2.56146

b[(Intercept) expedition_id:NUPT99102] -2.06069 2.61315 -5.55042

b[(Intercept) expedition_id:OHMI89101] 1.04390 0.94223 -0.14927

b[(Intercept) expedition_id:PUMO06301] -2.48834 2.39867 -5.77160

b[(Intercept) expedition_id:PUMO80302] -2.34651 2.52391 -5.79957

b[(Intercept) expedition_id:PUMO92308] 0.91100 1.26573 -0.70110

b[(Intercept) expedition_id:PUMO96101] 2.40227 0.85084 1.31315

b[(Intercept) expedition_id:PUTH02301] 1.42460 0.94670 0.19956

b[(Intercept) expedition_id:PUTH97302] -2.17485 2.46824 -5.61945

b[(Intercept) expedition_id:SHAL05301] -2.22066 2.48735 -5.66395

b[(Intercept) expedition_id:SPHN93101] 6.97740 1.67091 5.04511

b[(Intercept) expedition_id:SYKG12301] 5.48408 1.16635 4.07766

b[(Intercept) expedition_id:TAWO83101] -2.33970 2.51099 -5.75163

b[(Intercept) expedition_id:TILI04301] -2.47153 2.43775 -5.85646

b[(Intercept) expedition_id:TILI05301] 3.00634 0.67185 2.15360

b[(Intercept) expedition_id:TILI91302] 1.66625 0.95907 0.41207

b[(Intercept) expedition_id:TILI92301] -2.79137 2.41713 -6.04761

b[(Intercept) expedition_id:TKPO02102] -2.68386 2.35963 -5.91667

b[(Intercept) expedition_id:TUKU05101] -2.19821 2.53377 -5.59160

b[(Intercept) expedition_id:TUKU16301] 7.34626 1.69095 5.40123

Sigma[expedition_id:(Intercept),(Intercept)] 13.33845 2.59298 10.23552

50% 90%

(Intercept) -1.41192 -0.82198

age -0.04775 -0.03594

oxygen_usedTRUE 5.79289 6.45734

b[(Intercept) expedition_id:AMAD03107] 2.60352 3.90450

b[(Intercept) expedition_id:AMAD03327] 5.85107 8.57738

b[(Intercept) expedition_id:AMAD05338] 4.10337 5.08953

b[(Intercept) expedition_id:AMAD06110] -1.82839 0.97721

b[(Intercept) expedition_id:AMAD06334] -2.28804 0.16739

b[(Intercept) expedition_id:AMAD07101] -2.26341 0.30262

b[(Intercept) expedition_id:AMAD07310] 4.95883 5.93282

b[(Intercept) expedition_id:AMAD07337] 2.92699 4.06368

b[(Intercept) expedition_id:AMAD07340] 3.00040 4.05665

b[(Intercept) expedition_id:AMAD09331] 3.71307 5.08337

b[(Intercept) expedition_id:AMAD09333] 3.36566 4.63363

b[(Intercept) expedition_id:AMAD11304] 3.93480 5.26917

b[(Intercept) expedition_id:AMAD11321] 6.42822 9.09689

b[(Intercept) expedition_id:AMAD11340] 5.99432 8.74311

b[(Intercept) expedition_id:AMAD12108] 4.45718 6.08586

b[(Intercept) expedition_id:AMAD12342] 4.71390 6.24749

b[(Intercept) expedition_id:AMAD14105] -1.82372 0.88862

b[(Intercept) expedition_id:AMAD14322] 2.61407 3.58390

b[(Intercept) expedition_id:AMAD16354] 4.12871 5.44188

b[(Intercept) expedition_id:AMAD17307] 2.40293 3.57466

b[(Intercept) expedition_id:AMAD81101] 4.52562 5.65835

b[(Intercept) expedition_id:AMAD84401] -2.13143 0.48100

b[(Intercept) expedition_id:AMAD89102] -1.91936 0.64199

b[(Intercept) expedition_id:AMAD93304] 3.03452 4.22036

b[(Intercept) expedition_id:AMAD98311] 6.35244 9.00501

b[(Intercept) expedition_id:AMAD98316] 3.39920 4.30832

b[(Intercept) expedition_id:AMAD98402] 4.21856 5.76427

b[(Intercept) expedition_id:ANN115106] -2.30974 0.22170

b[(Intercept) expedition_id:ANN115108] -2.19292 0.35736

b[(Intercept) expedition_id:ANN179301] -2.18305 0.33680

b[(Intercept) expedition_id:ANN185401] -2.61884 -0.20623

b[(Intercept) expedition_id:ANN190101] -2.16417 0.39680

b[(Intercept) expedition_id:ANN193302] -2.40063 0.16340

b[(Intercept) expedition_id:ANN281302] -2.59899 -0.22190

b[(Intercept) expedition_id:ANN283302] -2.05955 0.43584

b[(Intercept) expedition_id:ANN378301] -2.68386 -0.29656

b[(Intercept) expedition_id:ANN402302] -1.89174 0.76295

b[(Intercept) expedition_id:ANN405301] -1.50956 1.29538

b[(Intercept) expedition_id:ANN409201] 6.27023 8.88495

b[(Intercept) expedition_id:ANN416101] -1.86808 0.87726

b[(Intercept) expedition_id:BARU01304] -2.04732 0.62060

b[(Intercept) expedition_id:BARU02101] -2.17593 0.25221

b[(Intercept) expedition_id:BARU02303] 4.05070 5.30395

b[(Intercept) expedition_id:BARU09102] -2.00162 0.50670

b[(Intercept) expedition_id:BARU10312] -2.25827 0.16895

b[(Intercept) expedition_id:BARU11303] 4.21665 5.15335

b[(Intercept) expedition_id:BARU92301] 3.34215 4.35998

b[(Intercept) expedition_id:BARU94301] -1.90406 0.66350

b[(Intercept) expedition_id:BARU94307] -2.29813 0.19694

b[(Intercept) expedition_id:BHRI86101] -2.52021 -0.12015

b[(Intercept) expedition_id:CHAM18101] -1.79744 0.85024

b[(Intercept) expedition_id:CHAM91301] 2.17494 3.08284

b[(Intercept) expedition_id:CHOY02117] 2.29903 3.50377

b[(Intercept) expedition_id:CHOY03103] 0.98213 2.33766

b[(Intercept) expedition_id:CHOY03318] -2.72864 -1.81911

b[(Intercept) expedition_id:CHOY04122] 1.80004 2.86191

b[(Intercept) expedition_id:CHOY04302] 4.42978 5.95567

b[(Intercept) expedition_id:CHOY06330] 3.52705 4.84829

b[(Intercept) expedition_id:CHOY07310] 3.58155 4.75554

b[(Intercept) expedition_id:CHOY07330] -2.23207 0.38696

b[(Intercept) expedition_id:CHOY07356] -2.15475 0.42109

b[(Intercept) expedition_id:CHOY07358] 2.38792 3.38464

b[(Intercept) expedition_id:CHOY10328] -3.71653 -1.34706

b[(Intercept) expedition_id:CHOY10341] -1.78952 1.07661

b[(Intercept) expedition_id:CHOY93304] -2.21219 0.38625

b[(Intercept) expedition_id:CHOY96319] 2.18085 3.20424

b[(Intercept) expedition_id:CHOY97103] -1.62612 -0.38628

b[(Intercept) expedition_id:CHOY98307] -0.60162 1.32033

b[(Intercept) expedition_id:CHOY99301] 3.21349 4.14416

b[(Intercept) expedition_id:DHA109113] 2.52337 4.19736

b[(Intercept) expedition_id:DHA116106] -2.42782 0.05942

b[(Intercept) expedition_id:DHA183302] -2.35806 0.22906

b[(Intercept) expedition_id:DHA193302] 1.54021 2.99080

b[(Intercept) expedition_id:DHA196104] -2.20450 0.38921

b[(Intercept) expedition_id:DHA197107] -1.94735 0.66353

b[(Intercept) expedition_id:DHA197307] -1.81805 0.73661

b[(Intercept) expedition_id:DHA198107] 1.57332 2.68301

b[(Intercept) expedition_id:DHA198302] -1.85583 0.79508

b[(Intercept) expedition_id:DHAM88301] -1.93024 0.70582

b[(Intercept) expedition_id:DZA215301] 5.62274 8.51937

b[(Intercept) expedition_id:EVER00116] -1.29375 0.26604

b[(Intercept) expedition_id:EVER00148] -1.87023 0.77133

b[(Intercept) expedition_id:EVER01108] -1.25700 0.03744

b[(Intercept) expedition_id:EVER02112] 0.11444 1.94698

b[(Intercept) expedition_id:EVER03124] 0.37369 2.04093

b[(Intercept) expedition_id:EVER03168] -1.82813 -0.43307

b[(Intercept) expedition_id:EVER04150] 0.97591 2.81875

b[(Intercept) expedition_id:EVER04156] -0.00116 1.52459

b[(Intercept) expedition_id:EVER06133] -1.46107 -0.11852

b[(Intercept) expedition_id:EVER06136] -0.59515 0.74203

b[(Intercept) expedition_id:EVER06152] -2.16460 -0.30929

b[(Intercept) expedition_id:EVER06163] -0.48145 0.86137

b[(Intercept) expedition_id:EVER07107] 0.93200 2.81941

b[(Intercept) expedition_id:EVER07148] -1.17001 0.28781

b[(Intercept) expedition_id:EVER07175] -0.22610 1.11899

b[(Intercept) expedition_id:EVER07192] 2.23603 5.83878

b[(Intercept) expedition_id:EVER07194] -5.80114 -3.93296

b[(Intercept) expedition_id:EVER08139] -0.51858 0.33543

b[(Intercept) expedition_id:EVER08152] 0.05550 1.39783

b[(Intercept) expedition_id:EVER08154] 1.04568 2.74937

b[(Intercept) expedition_id:EVER10121] -0.84457 0.80925

b[(Intercept) expedition_id:EVER10136] -1.15700 0.44107

b[(Intercept) expedition_id:EVER10154] -1.71942 -0.81161

b[(Intercept) expedition_id:EVER11103] -0.46131 0.53649

b[(Intercept) expedition_id:EVER11134] 2.99186 6.36882

b[(Intercept) expedition_id:EVER11144] -5.45481 -3.50037

b[(Intercept) expedition_id:EVER11152] 3.11376 6.55527

b[(Intercept) expedition_id:EVER12133] 2.27028 5.94529

b[(Intercept) expedition_id:EVER12141] 1.49195 3.60985

b[(Intercept) expedition_id:EVER14138] -1.74165 0.96990

b[(Intercept) expedition_id:EVER14165] 1.61811 3.68014

b[(Intercept) expedition_id:EVER15117] -3.12642 -0.88116

b[(Intercept) expedition_id:EVER15123] -2.95575 -0.62246

b[(Intercept) expedition_id:EVER16105] -1.29851 -0.37027

b[(Intercept) expedition_id:EVER17123] -1.64254 -0.66254

b[(Intercept) expedition_id:EVER17137] 1.78487 3.72382

b[(Intercept) expedition_id:EVER18118] 1.13325 2.48055

b[(Intercept) expedition_id:EVER18132] 0.52606 2.52797

b[(Intercept) expedition_id:EVER19104] 3.16916 6.42689

b[(Intercept) expedition_id:EVER19112] -6.84926 -5.20830

b[(Intercept) expedition_id:EVER19120] 3.07646 6.36249

b[(Intercept) expedition_id:EVER81101] -4.37135 -1.80506

b[(Intercept) expedition_id:EVER86101] -2.16055 0.36308

b[(Intercept) expedition_id:EVER89304] -2.61683 -0.28986

b[(Intercept) expedition_id:EVER91108] -4.96137 -2.80011

b[(Intercept) expedition_id:EVER96302] -0.31367 1.11112

b[(Intercept) expedition_id:EVER97105] -4.64312 -2.55486

b[(Intercept) expedition_id:EVER98116] -0.92969 0.85268

b[(Intercept) expedition_id:EVER99107] -4.62386 -2.51354

b[(Intercept) expedition_id:EVER99113] -2.14146 -0.68395

b[(Intercept) expedition_id:EVER99116] 0.60054 2.02357

b[(Intercept) expedition_id:FANG97301] -2.24933 0.33341

b[(Intercept) expedition_id:GAN379301] 4.73523 6.24003

b[(Intercept) expedition_id:GAUR83101] -2.38363 0.13411

b[(Intercept) expedition_id:GURK03301] -2.09383 0.53663

b[(Intercept) expedition_id:GYAJ15101] -1.54912 1.23681

b[(Intercept) expedition_id:HIML03302] 1.92032 3.06507

b[(Intercept) expedition_id:HIML08301] 1.67346 2.74487

b[(Intercept) expedition_id:HIML08307] -1.75679 0.91221

b[(Intercept) expedition_id:HIML13303] 4.98342 6.15932

b[(Intercept) expedition_id:HIML13305] -1.78899 0.87577

b[(Intercept) expedition_id:HIML18310] 5.89126 8.59576

b[(Intercept) expedition_id:JANU02401] -2.03564 0.68094

b[(Intercept) expedition_id:JANU89401] -2.22067 0.26902

b[(Intercept) expedition_id:JANU97301] -2.35703 0.11369

b[(Intercept) expedition_id:JANU98102] -1.96793 0.79803

b[(Intercept) expedition_id:JETH78101] 3.03177 4.37319

b[(Intercept) expedition_id:KABD85101] 3.20543 4.36733

b[(Intercept) expedition_id:KAN180101] -1.93108 0.67332

b[(Intercept) expedition_id:KANG81101] -0.64019 0.53604

b[(Intercept) expedition_id:KANG94301] -2.48786 -0.17647

b[(Intercept) expedition_id:KWAN82402] 5.75913 8.36575

b[(Intercept) expedition_id:LANG84101] -2.28659 0.32833

b[(Intercept) expedition_id:LEOE05301] -2.17404 0.40218

b[(Intercept) expedition_id:LHOT09108] 0.47423 2.35159

b[(Intercept) expedition_id:LHOT88301] 0.90947 3.34501

b[(Intercept) expedition_id:LNJU16301] -1.87076 0.77245

b[(Intercept) expedition_id:LSHR90301] 1.49716 2.61088

b[(Intercept) expedition_id:MAKA01104] -2.06227 0.60099

b[(Intercept) expedition_id:MAKA02102] 1.81502 2.92835

b[(Intercept) expedition_id:MAKA02103] 3.96840 5.20634

b[(Intercept) expedition_id:MAKA08112] -5.50998 -3.54058

b[(Intercept) expedition_id:MAKA09108] 0.04397 1.69792

b[(Intercept) expedition_id:MAKA15106] -2.13195 0.29561

b[(Intercept) expedition_id:MAKA90306] -2.32791 0.12128

b[(Intercept) expedition_id:MANA06305] -1.86824 0.97669

b[(Intercept) expedition_id:MANA08324] -5.87554 -4.02942

b[(Intercept) expedition_id:MANA09107] 1.21853 2.69330

b[(Intercept) expedition_id:MANA10319] 2.07413 3.01967

b[(Intercept) expedition_id:MANA11106] 4.05689 5.68540

b[(Intercept) expedition_id:MANA11318] 1.64095 3.67266

b[(Intercept) expedition_id:MANA17333] 4.45821 7.52485

b[(Intercept) expedition_id:MANA18306] 0.49761 1.89373

b[(Intercept) expedition_id:MANA80101] -2.80639 -0.89838

b[(Intercept) expedition_id:MANA82401] -5.49871 -3.43017

b[(Intercept) expedition_id:MANA84101] 6.64794 9.19887

b[(Intercept) expedition_id:MANA91301] -2.20116 0.28774

b[(Intercept) expedition_id:MANA97301] 2.39142 3.24958

b[(Intercept) expedition_id:NAMP79101] -2.30309 0.24799

b[(Intercept) expedition_id:NEMJ83101] -2.72437 -0.39955

b[(Intercept) expedition_id:NILC79101] 3.67073 4.91033

b[(Intercept) expedition_id:NUPT99102] -1.75308 1.03903

b[(Intercept) expedition_id:OHMI89101] 1.11351 2.18579

b[(Intercept) expedition_id:PUMO06301] -2.15880 0.35458

b[(Intercept) expedition_id:PUMO80302] -1.99216 0.58421

b[(Intercept) expedition_id:PUMO92308] 1.02975 2.44220

b[(Intercept) expedition_id:PUMO96101] 2.42213 3.44336

b[(Intercept) expedition_id:PUTH02301] 1.48088 2.58479

b[(Intercept) expedition_id:PUTH97302] -1.86844 0.71155

b[(Intercept) expedition_id:SHAL05301] -1.90362 0.75929

b[(Intercept) expedition_id:SPHN93101] 6.74751 9.20353

b[(Intercept) expedition_id:SYKG12301] 5.41985 7.01032

b[(Intercept) expedition_id:TAWO83101] -2.00321 0.64331

b[(Intercept) expedition_id:TILI04301] -2.16266 0.40081

b[(Intercept) expedition_id:TILI05301] 3.01175 3.87822

b[(Intercept) expedition_id:TILI91302] 1.70879 2.82383

b[(Intercept) expedition_id:TILI92301] -2.43748 0.01766

b[(Intercept) expedition_id:TKPO02102] -2.33704 -0.00470

b[(Intercept) expedition_id:TUKU05101] -1.89147 0.83791

b[(Intercept) expedition_id:TUKU16301] 7.12427 9.64484

Sigma[expedition_id:(Intercept),(Intercept)] 13.02944 16.74825

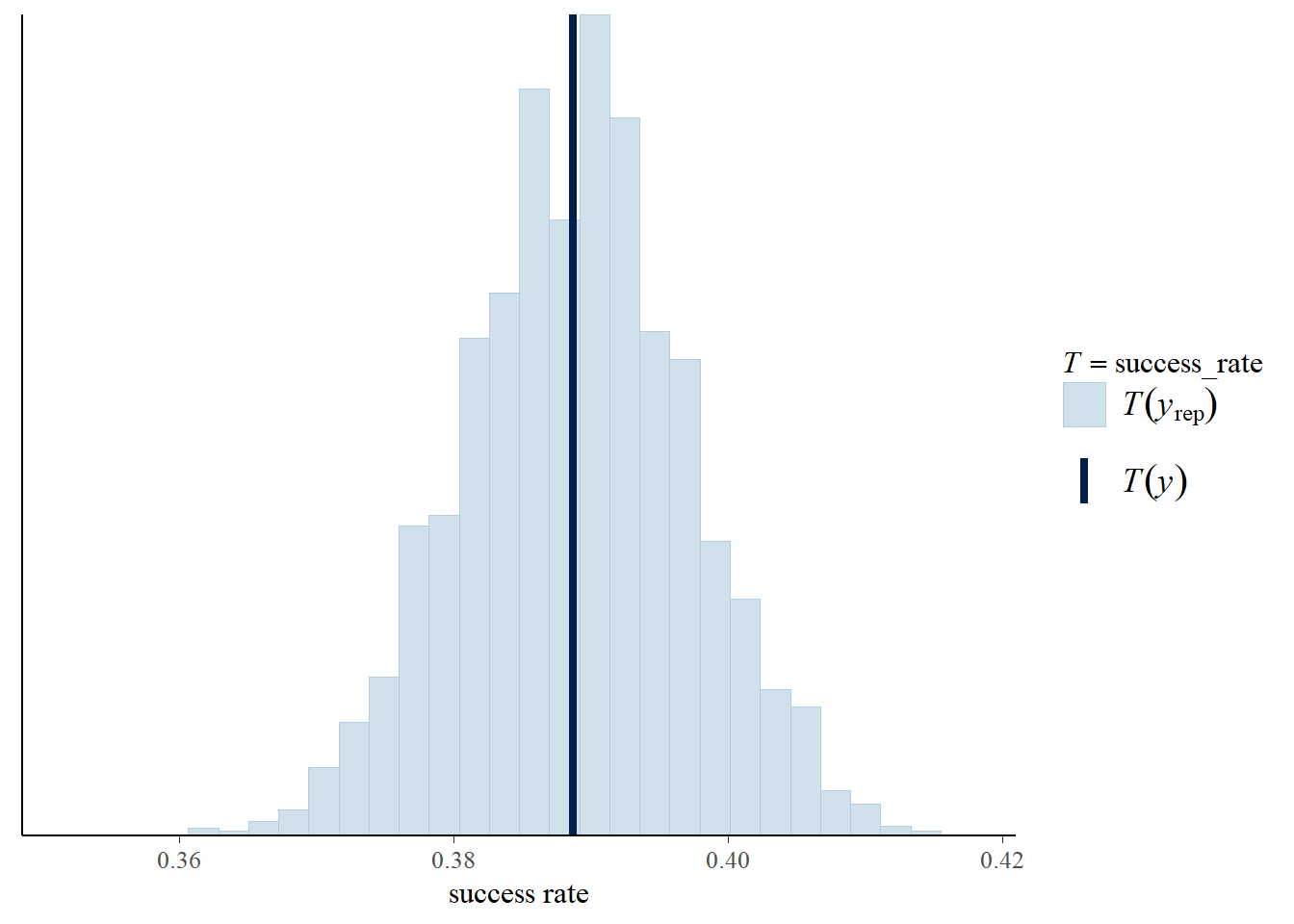

Fit Diagnostics:

         mean    sd      10%     50%     90%
mean_PPD 0.38865 0.00797 0.37813 0.38873 0.39884

The mean_ppd is the sample average posterior predictive distribution of the outcome variable (for details see help('summary.stanreg')).

MCMC diagnostics

mcse Rhat n_eff

(Intercept) 0.01101 1.00055 1908

age 0.00011 0.99957 6498

oxygen_usedTRUE 0.01097 1.00036 1961

b[(Intercept) expedition_id:AMAD03107] 0.01553 0.99977 4615

b[(Intercept) expedition_id:AMAD03327] 0.02795 0.99990 4470

b[(Intercept) expedition_id:AMAD05338] 0.01245 1.00005 3564

b[(Intercept) expedition_id:AMAD06110] 0.03355 0.99970 6171

b[(Intercept) expedition_id:AMAD06334] 0.03204 0.99966 5772

b[(Intercept) expedition_id:AMAD07101] 0.03266 0.99990 5759

b[(Intercept) expedition_id:AMAD07310] 0.01254 1.00029 3397

b[(Intercept) expedition_id:AMAD07337] 0.01423 1.00037 4016

b[(Intercept) expedition_id:AMAD07340] 0.01349 0.99992 3811

b[(Intercept) expedition_id:AMAD09331] 0.01471 0.99967 5047

b[(Intercept) expedition_id:AMAD09333] 0.01366 0.99940 5123

b[(Intercept) expedition_id:AMAD11304] 0.01388 0.99970 5684

b[(Intercept) expedition_id:AMAD11321] 0.02591 0.99984 4627

b[(Intercept) expedition_id:AMAD11340] 0.02772 1.00032 4392

b[(Intercept) expedition_id:AMAD12108] 0.01528 0.99958 6214

b[(Intercept) expedition_id:AMAD12342] 0.01564 1.00048 4928

b[(Intercept) expedition_id:AMAD14105] 0.03367 0.99987 5835

b[(Intercept) expedition_id:AMAD14322] 0.01356 0.99964 3118

b[(Intercept) expedition_id:AMAD16354] 0.01399 0.99945 5135

b[(Intercept) expedition_id:AMAD17307] 0.01494 1.00013 4429

b[(Intercept) expedition_id:AMAD81101] 0.01364 1.00035 3820

b[(Intercept) expedition_id:AMAD84401] 0.03392 0.99970 5669

b[(Intercept) expedition_id:AMAD89102] 0.03613 0.99959 4732

b[(Intercept) expedition_id:AMAD93304] 0.01354 0.99997 4923

b[(Intercept) expedition_id:AMAD98311] 0.02641 1.00000 4555

b[(Intercept) expedition_id:AMAD98316] 0.01173 1.00073 3432

b[(Intercept) expedition_id:AMAD98402] 0.01586 0.99943 5563

b[(Intercept) expedition_id:ANN115106] 0.03715 0.99987 4280

b[(Intercept) expedition_id:ANN115108] 0.03240 1.00005 5660

b[(Intercept) expedition_id:ANN179301] 0.03462 0.99944 5120

b[(Intercept) expedition_id:ANN185401] 0.03327 0.99957 5050

b[(Intercept) expedition_id:ANN190101] 0.03342 0.99965 5435

b[(Intercept) expedition_id:ANN193302] 0.03548 1.00021 4797

b[(Intercept) expedition_id:ANN281302] 0.03114 0.99954 5532

b[(Intercept) expedition_id:ANN283302] 0.03240 0.99958 5653

b[(Intercept) expedition_id:ANN378301] 0.03105 1.00055 5284

b[(Intercept) expedition_id:ANN402302] 0.03473 0.99947 5524

b[(Intercept) expedition_id:ANN405301] 0.03370 0.99984 6280

b[(Intercept) expedition_id:ANN409201] 0.02797 1.00059 4268

b[(Intercept) expedition_id:ANN416101] 0.03433 0.99958 5933

b[(Intercept) expedition_id:BARU01304] 0.03776 1.00072 4611

b[(Intercept) expedition_id:BARU02101] 0.03332 1.00013 5090

b[(Intercept) expedition_id:BARU02303] 0.01373 0.99970 5178

b[(Intercept) expedition_id:BARU09102] 0.03184 0.99999 5986

b[(Intercept) expedition_id:BARU10312] 0.03410 0.99997 4914

b[(Intercept) expedition_id:BARU11303] 0.01285 1.00065 2963

b[(Intercept) expedition_id:BARU92301] 0.01280 1.00046 3705

b[(Intercept) expedition_id:BARU94301] 0.03242 1.00070 5821

b[(Intercept) expedition_id:BARU94307] 0.03561 0.99979 4721

b[(Intercept) expedition_id:BHRI86101] 0.03266 0.99963 5193

b[(Intercept) expedition_id:CHAM18101] 0.03496 0.99950 5412

b[(Intercept) expedition_id:CHAM91301] 0.01272 1.00000 3456

b[(Intercept) expedition_id:CHOY02117] 0.01502 1.00018 4587

b[(Intercept) expedition_id:CHOY03103] 0.02031 1.00115 3912

b[(Intercept) expedition_id:CHOY03318] 0.01425 1.00081 2464

b[(Intercept) expedition_id:CHOY04122] 0.01331 0.99974 4703

b[(Intercept) expedition_id:CHOY04302] 0.01632 1.00040 4641

b[(Intercept) expedition_id:CHOY06330] 0.01424 1.00012 4864

b[(Intercept) expedition_id:CHOY07310] 0.01302 1.00009 4891

b[(Intercept) expedition_id:CHOY07330] 0.03297 0.99974 5620

b[(Intercept) expedition_id:CHOY07356] 0.03442 0.99975 5126

b[(Intercept) expedition_id:CHOY07358] 0.01227 0.99976 4451

b[(Intercept) expedition_id:CHOY10328] 0.02780 0.99971 6114